In this tutorial, you will learn how to make your own custom datasets and dataloaders in PyTorch. For this, we will be using the Dataset class of PyTorch.

Introduction

When carrying out any machine learning project, data is one of the most important aspects. Preparing, cleaning, preprocessing, and loading the data into a usable format takes a lot of time and resources.

In deep learning, you have probably loaded the MNIST, Fashion MNIST, or CIFAR10 dataset through the dataset classes provided by your deep learning framework of choice. These datasets already come in a very usable format, and you just have to apply the transforms before feeding them to your neural network. In real life, however, you may get data in a format that is totally different from what you can actually feed to a neural network.

So, in this article, we will get hands-on experience in preparing our own datasets and iterable dataloaders from the data that we have at hand.

What Will We Cover in this Article?

- How to use the PyTorch Dataset class?
- How to write class modules to prepare our dataset?
- How to make an iterable dataloader from our custom dataset?

Get the Data for This Article

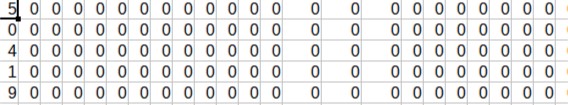

In this article, we will use the CSV file format of the MNIST dataset. I have chosen MNIST because many people are already familiar with it. However, most have probably loaded it directly through the dataset classes of a deep learning framework. Here, we will work our way up from the raw CSV files, all the way from loading the data to an iterable, trainable dataloader.

You can get the CSV versions of the MNIST training and test sets from the following two links.

Train.csv

Test.csv

In the above two CSV files, train.csv contains 60,000 samples and test.csv contains 10,000 samples, for a total of 70,000 samples.

Getting to Know the Dataset Class

Before using the MNIST data, we will first learn a bit more about the PyTorch Dataset class. In the process, we will create a small dummy dataset. This will help us understand some of its important functionalities and how to use the DataLoader class along with it.

So, first some important information about the Dataset class. The best thing about the PyTorch library is that we can combine simple Python code with almost any of the classes in the library.

The Dataset class has three important class functions:

- __init__(): as usual, the starting point where we initialize everything that we use in the class.
- __len__(): returns the length of the dataset, that is, the number of samples in the dataset.
- __getitem__(): returns a sample from the dataset when we provide an index value to it.

All of the above concepts will become clearer once we start with the coding part. Let’s create a small example dataset first so that the above concepts become more concrete.

Small Example Using the Dataset Class

First of all, remember that we need to override two of the Dataset class functions: __len__() and __getitem__().

In this dummy dataset, we will create a NumPy array and pass it as input to the class.

So, let’s write the class code and call it ExampleDataset.

The following block is class code in Python.

'''
We can do amazing things with the PyTorch Dataset class. We need to ensure that we
override two of its functions:
`__len__()`: returns the size of the dataset, that is, the total number of samples.
`__getitem__()`: when given an index, returns the data sample corresponding to that index.
'''
import numpy as np
from torch.utils.data import Dataset

class ExampleDataset(Dataset):
    def __init__(self, data):
        # store the data (a NumPy array) for use in the other methods
        self.data = data

    def __len__(self):
        # total number of samples
        return len(self.data)

    def __getitem__(self, idx):
        # return the sample (or slice) at the given index
        return self.data[idx]

Let’s go over the above code block in detail. As usual, we first import the required libraries: NumPy and the PyTorch Dataset class. Then we define our custom ExampleDataset() class. Note that it inherits from the PyTorch Dataset class, which is really important, as inheriting from the class allows us to use the features of the Dataset class.

You can see that our custom class has three functions. First, the __init__() function takes data as a parameter, which we pass when creating an object of the class. We will get to it shortly. __init__() simply stores our dataset so that it can be used in the other functions.

Next, the __len__() function returns the length of the NumPy array data. Finally, __getitem__() returns the element of data at the index given by the idx parameter.

So, let’s see what happens when we give a small Numpy array as the data to the class.

sample_data = np.arange(0, 10)
print('The whole data: ', sample_data)
dataset = ExampleDataset(sample_data)
print('Number of samples in the data: ', len(dataset))
print(dataset[2])
print(dataset[0:5])

Running the code gives the following output.

The whole data: [0 1 2 3 4 5 6 7 8 9]
Number of samples in the data: 10
2
[0 1 2 3 4]

First, we create a simple NumPy array with 10 elements. Then we initialize the dataset object of the class and pass sample_data as an argument. In the last three lines, we print the length of the dataset, the element at index 2, and the elements from index 0 through 4 (the slice 0:5 excludes index 5).
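The example above indexes the dataset directly. As a minimal sketch of how the DataLoader class uses the same two functions, we can wrap ExampleDataset in a DataLoader. The batch size of 4 is an arbitrary choice for illustration, and the class is repeated so that the snippet runs on its own.

```python
import numpy as np
from torch.utils.data import DataLoader, Dataset


class ExampleDataset(Dataset):
    # same minimal dataset as above
    def __init__(self, data):
        self.data = data

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        return self.data[idx]


dataset = ExampleDataset(np.arange(0, 10))

# batch_size=4 groups the indices into [0-3], [4-7], [8-9]; shuffle=False keeps the order
loader = DataLoader(dataset, batch_size=4, shuffle=False)

batches = [batch.tolist() for batch in loader]
print(batches)  # [[0, 1, 2, 3], [4, 5, 6, 7], [8, 9]]
```

DataLoader uses __len__() to know how many samples there are and calls __getitem__() once per index. Its default collate function then turns each group of NumPy integers into a PyTorch tensor, and the last batch simply holds the two leftover samples.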

I hope that things are clearer now that you have seen how the Dataset class actually works in code. We will get into even more details later in this article.

Creating Datasets and DataLoaders from CSV Files

Taking the concept of creating custom datasets a bit further, we will now create datasets from CSV files. I hope that you have downloaded the MNIST training and test CSV files earlier in this article. If not, you can get them here.

In this section, we will read the data from the CSV files, and create iterable DataLoaders that we can feed into a neural network for training and testing.

As mentioned earlier, train.csv contains 60,000 samples and test.csv contains 10,000 samples. Each row holds the digit's label in the first column, followed by its 784 (28×28) pixel values.

Many of you have probably worked with the MNIST dataset before, so you will be familiar with the usual steps of loading the data, dividing it into training and test sets, and so on. We will cover all those steps here as well, but our main focus will be on creating the custom dataset for iterable data loading.

So, let’s get to work and create our dataset and train a deep learning neural network on that data as well.

Importing the Libraries and Defining Helper Functions

This is the familiar place where we will import the required libraries. We will also define a helper function to fetch the computation device that is available on the system.

import pandas as pd
import numpy as np
import torch
import torchvision
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torchvision.transforms import transforms
from torch.utils.data import DataLoader
from torch.utils.data import Dataset

The above are the imports that we need along with this project.

The following code block is to get the computation device. It is a very simple if-else code.

def get_device():
    if torch.cuda.is_available():
        device = 'cuda:0'
    else:
        device = 'cpu'
    return device

device = get_device()

Load and Prepare the Data

Here, we will load our data. We will also separate the pixel values from the image labels.

# read the data
df_train = pd.read_csv('mnist_train.csv')
df_test = pd.read_csv('mnist_test.csv')

# get the image pixel values and labels
train_labels = df_train.iloc[:, 0]
train_images = df_train.iloc[:, 1:]
test_labels = df_test.iloc[:, 0]
test_images = df_test.iloc[:, 1:]

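First, we load the data. Be sure to give the CSV file paths according to your setup; I have assumed that the files are present in the current working directory. Note that pd.read_csv() treats the first row as column names by default, so if your CSV files do not have a header row, pass header=None to keep the first sample.

Then we get the image labels (the first column) and the image pixel values (the remaining columns), for both the training set and the test set.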
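To make the column layout concrete, here is a minimal sketch that builds a small, hypothetical stand-in for the CSV data in memory (3 random rows, each a label followed by 784 pixel values) and splits it with the same iloc calls. The random values are only placeholders for the real file contents.

```python
import numpy as np
import pandas as pd

# hypothetical stand-in for mnist_train.csv: 3 rows of [label, pixel_1, ..., pixel_784]
rng = np.random.default_rng(0)
labels = rng.integers(0, 10, size=3)
pixels = rng.integers(0, 256, size=(3, 784))
df = pd.DataFrame(np.column_stack([labels, pixels]))

sample_labels = df.iloc[:, 0]   # first column: digit labels
sample_images = df.iloc[:, 1:]  # remaining 784 columns: pixel values

# one row reshaped into a 28x28 single-channel image, as the dataset class does later
img = np.asarray(sample_images.iloc[0, :]).astype(np.uint8).reshape(28, 28, 1)
print(sample_labels.shape, sample_images.shape, img.shape)  # (3,) (3, 784) (28, 28, 1)
```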

Define the Image Transforms

Now, we will define the image transforms using torchvision.transforms.

# define transforms
transform = transforms.Compose(
    [transforms.ToPILImage(),
     transforms.ToTensor(),
     transforms.Normalize((0.5, ), (0.5, ))
     ])

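For the image transforms, we convert the data into a PIL image, then into a PyTorch tensor (ToTensor() also scales the pixel values from 0-255 to [0, 1]), and finally we normalize the image data with a mean and standard deviation of 0.5, which maps the values to [-1, 1]. These transforms are applied inside the class that we will write to prepare the dataset.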

Prepare the Custom Dataset and DataLoaders

So, this is perhaps the most important section of this tutorial. We will write our custom Dataset class (MNISTDataset), prepare the dataset, and define the dataloaders.

The following code block defines the MNISTDataset class, prepares the custom dataset, and prepares the iterable DataLoaders as well. Take a moment to carefully analyze the code.

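# custom dataset
class MNISTDataset(Dataset):
    def __init__(self, images, labels=None, transforms=None):
        self.X = images    # DataFrame of pixel values, one image per row
        self.y = labels    # Series of labels, or None for unlabeled data
        self.transforms = transforms

    def __len__(self):
        return len(self.X)

    def __getitem__(self, i):
        # pixel values of the i-th image (one full row)
        data = self.X.iloc[i, :]
        # convert to a NumPy array and reshape into a 28x28 grayscale image (H, W, C)
        data = np.asarray(data).astype(np.uint8).reshape(28, 28, 1)

        if self.transforms:
            data = self.transforms(data)

        if self.y is not None:
            # positional lookup, so it also works if the labels' index is not 0..n-1
            return (data, self.y.iloc[i])
        else:
            return data

train_data = MNISTDataset(train_images, train_labels, transform)
test_data = MNISTDataset(test_images, test_labels, transform)

# dataloaders
trainloader = DataLoader(train_data, batch_size=128, shuffle=True)
# shuffling the test set is not needed; the accuracy is the same either way
testloader = DataLoader(test_data, batch_size=128, shuffle=False)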

In the __init__() function, we initialize the images, labels, and transforms. Note that by default the labels and transforms parameters are None, so we can pass them depending on the requirements of the project. In this article, we pass both of them.

The __len__() function returns the length as usual.

Most of the work is done in the __getitem__() function, which fetches the data one index at a time. For each index, we get the pixel data of the entire row. We then convert the data to a NumPy array and reshape it into a 28×28 grayscale image with a single channel. Next, we apply the transforms that we defined earlier to the pixel data. Finally, we return the pixel data along with the corresponding label, or only the pixel data if no labels were given.

The next few lines of code are fairly straightforward. We create two objects of the MNISTDataset() class, train_data and test_data, passing the image pixels, the image labels, and the transforms as arguments. Finally, we define trainloader and testloader. These lines should look very similar to how you would get the dataloaders for the built-in PyTorch MNIST dataset.

So, our dataloaders are ready. Next, we are all set to define our neural network and train it.

The next section mainly consists of code blocks with little explanation, as you are probably familiar with these parts already.

Define the Neural Network, Train, and Test it

Let’s define a very simple neural network.

# define the neural net class
class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(in_channels=1, out_channels=20,
                               kernel_size=5, stride=1)
        self.conv2 = nn.Conv2d(in_channels=20, out_channels=50,
                               kernel_size=5, stride=1)
        # 50 channels x 4 x 4 after two conv + max-pool stages = 800 features
        self.fc1 = nn.Linear(in_features=800, out_features=500)
        self.fc2 = nn.Linear(in_features=500, out_features=10)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.max_pool2d(x, 2, 2)
        x = F.relu(self.conv2(x))
        x = F.max_pool2d(x, 2, 2)
        x = x.view(x.size(0), -1)  # flatten to (batch_size, 800)
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return x

net = Net().to(device)
print(net)
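Why does fc1 take 800 input features? For a k×k convolution with stride 1 and no padding, the output side length is W - k + 1, and a 2×2 max pool with stride 2 halves it: 28 → 24 → 12 after the first stage and 12 → 8 → 4 after the second. With 50 output channels this gives 50 × 4 × 4 = 800. The sketch below checks this arithmetic by passing a dummy input through the same two convolutional layers; only the shapes matter, so the random weights are irrelevant.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# the same two convolutional layers as in Net
conv1 = nn.Conv2d(in_channels=1, out_channels=20, kernel_size=5, stride=1)
conv2 = nn.Conv2d(in_channels=20, out_channels=50, kernel_size=5, stride=1)

x = torch.zeros(1, 1, 28, 28)             # one dummy 28x28 grayscale image
x = F.max_pool2d(F.relu(conv1(x)), 2, 2)   # conv: 28 -> 24, pool: 24 -> 12
print(x.shape)                             # torch.Size([1, 20, 12, 12])
x = F.max_pool2d(F.relu(conv2(x)), 2, 2)   # conv: 12 -> 8, pool: 8 -> 4
print(x.shape)                             # torch.Size([1, 50, 4, 4])
flat = x.view(x.size(0), -1)               # flatten: 50 * 4 * 4 = 800
print(flat.shape)                          # torch.Size([1, 800])
```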

Define the loss function and the optimizer.

# loss
criterion = nn.CrossEntropyLoss()
# optimizer
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

Now, we will define the training and test functions and execute them.

def train(net, trainloader):
    for epoch in range(10):  # no. of epochs
        running_loss = 0
        for data in trainloader:
            # data pixels and labels to GPU if available
            inputs, labels = data[0].to(device, non_blocking=True), data[1].to(device, non_blocking=True)
            # set the parameter gradients to zero
            optimizer.zero_grad()
            outputs = net(inputs)
            loss = criterion(outputs, labels)
            # propagate the loss backward
            loss.backward()
            # update the parameters using the gradients
            optimizer.step()
            running_loss += loss.item()
        print('[Epoch %d] loss: %.3f' %
              (epoch + 1, running_loss/len(trainloader)))
    print('Done Training')

def test(net, testloader):
    correct = 0
    total = 0
    with torch.no_grad():
        for data in testloader:
            inputs, labels = data[0].to(device, non_blocking=True), data[1].to(device, non_blocking=True)
            outputs = net(inputs)
            # the predicted class is the index of the largest output
            _, predicted = torch.max(outputs.data, 1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy of the network on test images: %0.3f %%' % (
        100 * correct / total))

train(net, trainloader)
test(net, testloader)

The following is the result after training. We get a test accuracy of about 98.4% after 10 epochs, which is fairly good for such a simple network.

Net(
  (conv1): Conv2d(1, 20, kernel_size=(5, 5), stride=(1, 1))
  (conv2): Conv2d(20, 50, kernel_size=(5, 5), stride=(1, 1))
  (fc1): Linear(in_features=800, out_features=500, bias=True)
  (fc2): Linear(in_features=500, out_features=10, bias=True)
)
[Epoch 1] loss: 1.304
[Epoch 2] loss: 0.266
[Epoch 3] loss: 0.173
[Epoch 4] loss: 0.133
[Epoch 5] loss: 0.110
[Epoch 6] loss: 0.095
[Epoch 7] loss: 0.084
[Epoch 8] loss: 0.076
[Epoch 9] loss: 0.069
[Epoch 10] loss: 0.064
Done Training
Accuracy of the network on test images: 98.370 %
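The accuracy in the test function comes from taking, for each image, the index of the largest network output and comparing it with the true label. Here is a small worked sketch of that computation on hypothetical outputs for 4 images over 3 classes (the real network has 10 classes):

```python
import torch

# hypothetical network outputs (logits) for 4 images over 3 classes, and the true labels
outputs = torch.tensor([[2.0, 0.1, 0.3],
                        [0.2, 1.5, 0.1],
                        [0.1, 0.2, 3.0],
                        [1.0, 2.0, 0.5]])
labels = torch.tensor([0, 1, 2, 0])

_, predicted = torch.max(outputs, 1)  # index of the largest value in each row
correct = (predicted == labels).sum().item()
total = labels.size(0)
print(predicted.tolist(), 100 * correct / total)  # [0, 1, 2, 1] 75.0
```

Three of the four predictions match their labels, so the accuracy is 75%.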

Summary and Conclusion

I hope that you found this article helpful. You can leave your thoughts in the comment section and I will surely address them.

If you are new to PyTorch, you may find my Getting Started with PyTorch series very helpful.

- Part 1: Installing PyTorch and Covering the Basics.

- Part 2: Basics of Autograd in PyTorch.

- Part 3: Basics of Neural Network in PyTorch.

- Part 4: Image Classification using Neural Networks.

If you want, you can reach out to me on LinkedIn and Twitter.

Excellent stuff. Brother, please teach me how to extract 3D features of any image/video in PyTorch. Also, how can I use a pretrained model such as Kinetics 400 with PyTorch?

I am glad that you liked the post. Regarding 3D feature extraction, I will probably post an article in the near future. But before that, I have a few other articles lined up. These articles take a long time to prepare, and I try my best to keep them error-free. So, you may have to wait for a few weeks. In the meantime, you can look at the other available posts.

I'm getting an error: too many indexers.

Hello Shripad, can you please tell me on which line you are getting the error? I ran the code and everything executed as expected. Please point out the code so that I can double-check.

Thank you very much for such a clear guide!

I have one small question though. I don't fully understand why the first FC-layer has 800 input features. According to my understanding, it would rather be 20*20*50=20000 (50 channels and 20×20 “images”), which clearly does not work. The first CONV-layer converts images from 28×28 to 24×24. Then the second CONV-layer converts this size further from 24×24 down to 20×20. So I am a bit confused here. Thanks in advance for a clarification!

Oh, I didn't count the MaxPool-layers, things make sense now 🙂

I am using a dataset of 50 x 300, with no splitting between testing and training or labels. I get an error with the custom dataset class.

>>> Error: cannot reshape array of size 300 into shape (50,300,1)

def __getitem__(self, i):
    data = self.X.iloc[i, :]
    data = np.asarray(data).astype(np.uint8).reshape(50, 300, 1)

Is this because it only reads one line of my dataset? How can I expand the iteration over the entire dataset?

Hello Alayt. Can you please check whether your images are greyscale or RGB?

And also check that you have properly flattened your images. That may be the issue here.

I am using this to flatten my image:

real = real.view(cur_batch_size, -1).to(device)

My dataset is word embeddings (integers), so I’m not sure if they have image colors, unless you mean train_data.

Alayt, I am a bit confused. Are you using the code on word embeddings or on image data? The code that I have written is for images of dimensions 28x28x1.