Object detection is now one of the most practical and industry-altering applications of deep learning and computer vision. For that reason, many learners are interested in learning about it, and many deep learning practitioners seek to apply it to real-life problems. Because of that, there are a lot of open-source repositories, libraries, and codebases that make object detection training easier. One of them is MMDetection, which is part of the OpenMMLab projects. It is a great library for fast, custom object detection training. But sometimes, it can be tricky to set up properly with GPU support. To make that process simpler, we will go through how to install MMDetection on both Ubuntu and Windows for RTX and GTX GPUs.

Here, we will go through installation steps that work on both Ubuntu and Windows. Along with that, they cover a broad range of GPUs from the RTX 30 series and GTX 10 series families.

This post is the first part of the Getting Started with MMDetection for Object Detection series.

- Install MMDetection on Ubuntu and Windows for RTX and GTX GPUs

We will cover the following topics in this post:

- Why this post?

- A detailed view of hardware and operating systems that the MMDetection installation will work on.

- Prerequisites to Install MMDetection on Ubuntu and Windows.

- Steps to install MMDetection on Ubuntu and Windows for RTX and GTX GPUs.

- Verifying the installation.

- A few Frequently Asked Questions covering why we did some things in a particular way in this post.

Hopefully, this post will prove helpful to get you up and running with MMDetection as soon as possible.

Why This Post?

Recently, I have been exploring MMDetection for custom object detection and learning about it in depth. But I always run into some kind of issue when installing MMDetection on different platforms or operating systems. These problems can arise due to:

- Change in operating systems, for example, from Ubuntu to Windows or vice-versa.

- Change in hardware, such as moving to a different GPU series or architecture, for example, from GTX to RTX series GPUs.

- Change in globally installed CUDA and cuDNN versions, or CUDA and cuDNN being available only inside certain Python environments.

- Or even from a simple change in the Python environment.

Somehow, I always managed to fix it. But then again, it should not be this difficult to set up on different platforms, hardware, and operating systems. And I am probably not the only one facing these issues.

For this reason, I tried to create a simple, streamlined, single set of instructions that works across different GPU families on both Ubuntu and Windows. This took some time to figure out, but they finally work, and I am sharing them here so that anyone else can use them as well.

Of course, these instructions may change or become obsolete as newer versions are released or as operating systems and hardware change. But hopefully, they will stay relevant for a long time after the publication of this post.

Hardware and Operating Systems that the Installation Steps Have Been Tested On

The instructions that we will follow to install MMDetection on Ubuntu and Windows have already been tested across a few different hardware and operating system combinations.

For Ubuntu

These installation instructions were tested on two different machines with Ubuntu 20.04.

Machine 1:

- Operating system: Ubuntu 20.04.

- GPU: GTX 1060.

- Globally installed CUDA version: 11.6

- Globally installed cuDNN version: 8.4.0

Machine 2:

- Operating system: Ubuntu 20.04.

- GPU: RTX 3080.

- Globally installed CUDA version: 11.4

- Globally installed cuDNN version: 8.2.0

Note: In the above tests, recent versions of CUDA and cuDNN were installed globally on the machines. However, installing them may not be mandatory, as we will see later with the Windows installation. You may try the Ubuntu installation without globally installing CUDA and cuDNN. We will not cover the installation of CUDA and cuDNN in this post.

But if you want to install CUDA and cuDNN first, then the official websites are the best places to go.

- NVIDIA CUDA downloads.

- NVIDIA cuDNN downloads. You may need to log in or join the NVIDIA Developer Program.

For Windows

All the testing for Windows was done on two machines, both running Windows 10.

Machine 1:

- Operating system: Windows 10.

- GPU: GTX 1060.

- No global installation of CUDA or cuDNN. All tests were run in an Anaconda/Miniconda environment.

Machine 2:

- Operating system: Windows 10.

- GPU: RTX 3080.

- No global installation of CUDA or cuDNN. All tests were run in an Anaconda/Miniconda environment.

As the Windows 10 setups above show, a global installation of CUDA and cuDNN may not be necessary on Ubuntu either. This also shows the broad range of settings in which the installation instructions that we are going to cover should work.

Prerequisites to Install MMDetection on Ubuntu and Windows

Note that all the installations take place in an Anaconda/Miniconda environment. It is preferable to install Miniconda before moving forward, as it comes with far fewer preinstalled packages and is much faster to set up. We will cover creating the environment in the installation steps. For now, you just need to install Miniconda on either Windows or Ubuntu from the official website.

Other GPUs That The Installation Steps Should Support

Although not tested yet, the installation steps should also work with the following GPUs:

- GTX 1070, GTX 1070 Ti, GTX 1080, GTX 1080 Ti.

- RTX 3060, RTX 3060 Ti, RTX 3070, RTX 3070 Ti, RTX 3080 Ti, RTX 3090.

Steps to Install MMDetection on Ubuntu and Windows for RTX and GTX GPUs

To install MMDetection correctly (or, for that matter, any library from the OpenMMLab family), we need to make sure that the MMCV library is installed correctly. MMCV is the foundational library for all the computer vision toolboxes in the OpenMMLab family, so it is required to use any of them. Most of the errors happen while installing MMCV; once it is installed correctly, installing MMDetection or any other toolbox from the family is pretty easy. So, here, we will focus on installing MMCV and MMDetection.

Installation Steps

Let’s get down to the most important part of the post now: installing MMCV and setting up MMDetection on Ubuntu and Windows for RTX and GTX GPUs.

Note that all the steps are the same for both Ubuntu and Windows.

1. Creating Conda Environment and Activating It

The first step is to create an Anaconda/Miniconda (conda) environment and activate it for all the further instructions.

conda create -n mmcv python=3.9

Here, the environment name is mmcv and we are installing Python 3.9 with it.

Please note that, according to the tests, Python 3.9 is the highest version that we can choose so that the steps can be common across the different GPUs and operating systems.

Now, activate the environment.

conda activate mmcv
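If you want to catch an environment that was accidentally created with a different Python version, a small check like the following can be run inside the activated environment. It is a sketch that assumes, per the tests above, that Python 3.9 is the highest version to use; the function name `python_version_ok` is hypothetical.

```python
import os
import sys


def python_version_ok(version=None, max_version=(3, 9)):
    """Return True if the (major, minor) Python version is at most max_version."""
    # Default to the interpreter that is running this script
    if version is None:
        version = sys.version_info
    return tuple(version[:2]) <= max_version


if __name__ == "__main__":
    # conda sets CONDA_DEFAULT_ENV to the name of the active environment
    env_name = os.environ.get("CONDA_DEFAULT_ENV", "<none>")
    print(f"Active conda environment: {env_name}")
    print(f"Python {sys.version.split()[0]} OK: {python_version_ok()}")
```

Run it with python after conda activate mmcv; you would expect to see mmcv as the environment name and True for the version check.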

2. Installing PyTorch with CUDA Support using Conda

One important thing to note here: while creating and testing these steps, PyTorch 1.11.0 was the latest version available, and the conda command installed it with cudatoolkit 11.3. If a newer version of PyTorch with a newer cudatoolkit is available through conda at the time of your reading, please go with that.

If a newer version is available but you still want PyTorch 1.11.0 with cudatoolkit 11.3, so that none of the later commands need to change, you can install it from PyTorch's previous versions page, which lists the installation commands for all earlier releases.

conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

At the time of writing this, it installed PyTorch 1.11.0 with cudatoolkit 11.3 in the mmcv environment.
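Before moving on, it can help to confirm what conda actually installed. This sketch queries `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()`; with the command above you would expect 1.11.0 and 11.3 at the time of writing, though your versions may differ. The helper name `torch_cuda_summary` is hypothetical.

```python
def torch_cuda_summary():
    """Return PyTorch/CUDA details as a dict, or None if PyTorch is not installed."""
    try:
        import torch
    except ImportError:
        return None
    return {
        # e.g. "1.11.0"; pip wheels may carry a suffix such as "+cu113"
        "torch": torch.__version__,
        # CUDA version PyTorch was built with; None for CPU-only builds
        "cuda_build": torch.version.cuda,
        # True only if a usable NVIDIA GPU and driver are found
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    summary = torch_cuda_summary()
    if summary is None:
        print("PyTorch is not installed in this environment.")
    else:
        for key, value in summary.items():
            print(f"{key}: {value}")
```

If cuda_build is None or cuda_available is False, it is worth fixing the PyTorch installation before installing MMCV, since the next step depends on these versions.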

3. Install MMCV Full According to the PyTorch and CUDA Versions

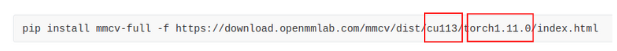

This part is pretty important. The next command assumes that the PyTorch version is 1.11.0 and the cudatoolkit version in the environment is 11.3. Also, the following command will always install the latest stable version of MMCV that is available.

pip install mmcv-full -f https://download.openmmlab.com/mmcv/dist/cu113/torch1.11.0/index.html

In the above command, cu113 specifies that we have cudatoolkit 11.3 in the environment. Similarly, torch1.11.0 specifies that our PyTorch version is 1.11.0. Both must match the versions installed in your environment; otherwise, MMCV will not be installed with the correct CUDA/GPU support.

If you have a newer version of PyTorch and cudatoolkit in the environment, you need to change the numbers marked with red rectangles in the figure at the start of this step accordingly.
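The mapping from the two version numbers to the index URL can be written as a small helper. This is a sketch that assumes the URL pattern in the command above holds for other PyTorch/CUDA combinations too; `mmcv_index_url` is a hypothetical name, and you should still check the MMCV installation docs to confirm that prebuilt wheels exist for your combination.

```python
def mmcv_index_url(torch_version: str, cuda_version: str) -> str:
    """Build the find-links URL used with `pip install mmcv-full -f ...`."""
    # Drop a local build suffix such as "+cu113" from the PyTorch version
    torch_version = torch_version.split("+")[0]
    # "11.3" -> "cu113"
    cuda_tag = "cu" + cuda_version.replace(".", "")
    return (
        "https://download.openmmlab.com/mmcv/dist/"
        f"{cuda_tag}/torch{torch_version}/index.html"
    )


if __name__ == "__main__":
    url = mmcv_index_url("1.11.0", "11.3")
    print(f"pip install mmcv-full -f {url}")
```

Inside the environment, you could pass torch.__version__ and torch.version.cuda instead of the hard-coded strings so that the URL always follows the installed versions.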

4. Install MMDetection

Hopefully, the above installation was successful. If so, we are all set to install MMDetection. This is pretty straightforward: we just install the latest version of the MMDetection library without specifying any version. At the time of writing, the latest MMDetection release was compatible with the MMCV version installed above.

pip install mmdet

That’s it. If this installation happens successfully, all the difficult parts are complete.
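Because pip install mmdet does not pin a version, it can be useful to record which versions actually ended up in the environment. This is a standard-library-only sketch using `importlib.metadata` (Python 3.8+); the helper name `installed_versions` is hypothetical, and the distribution names are the ones used in the install commands of this post.

```python
from importlib import metadata


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(["torch", "mmcv-full", "mmdet"]).items():
        print(f"{name}: {version or 'not installed'}")
```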

Installing a Few Other Dependencies

The installation of all the major libraries is now complete. But there are a few more dependencies that we need to take care of. These will ensure error-free inference and training, and also take care of a few version issues.

Installing TensorBoard

The MMCV/MMDetection libraries can use TensorBoard for logging, so we need to install it. This is also important if you wish to run any of the demo training notebooks.

pip install tensorboard
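For reference, in MMDetection 2.x config files (the generation that pairs with the mmcv-full 1.x installed here), TensorBoard logging is typically enabled by adding a TensorboardLoggerHook to log_config. This is a sketch of that config fragment; the interval value is illustrative, so compare it with the default_runtime.py config shipped with your MMDetection version.

```python
# Logging section of an MMDetection 2.x-style config file (sketch)
log_config = dict(
    interval=50,  # log every 50 iterations
    hooks=[
        dict(type="TextLoggerHook"),         # plain-text logs in the terminal/log file
        dict(type="TensorboardLoggerHook"),  # TensorBoard event files in the work dir
    ],
)
```

After training starts, you can point TensorBoard at the work directory of the run to view the logged curves.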

Installing OpenCV Python

This one is also quite important as MMCV uses OpenCV for a lot of visualizations and other computer vision functionalities internally.

pip install opencv-python

Installing the Correct Version of Protobuf

There is a very high chance that the above installation steps pulled in the latest version of Protobuf. That version throws the following error when importing mmdet or mmcv, or when starting training.

...
TypeError: Descriptors cannot not be created directly.
If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.
If you cannot immediately regenerate your protos, some other possible workarounds are:
 1. Downgrade the protobuf package to 3.20.x or lower.
 2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

More information: https://developers.google.com/protocol-buffers/docs/news/2022-05-06#python-updates

To get around this error, we need to downgrade Protobuf to a version that works with the other libraries. As the error message suggests, that means 3.20.x or lower; as of writing this, Protobuf 3.20.1 worked in a stable manner.

pip install protobuf==3.20.1
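To confirm that the downgrade took effect, you can compare the installed Protobuf version against the 3.20.x ceiling from the error message. A minimal sketch; the function name `protobuf_version_ok` is hypothetical, and it only compares the major and minor version numbers.

```python
from importlib import metadata


def protobuf_version_ok(version: str, max_minor=(3, 20)) -> bool:
    """Return True if a protobuf version string is 3.20.x or lower."""
    # Compare only major.minor, e.g. "3.20.1" -> (3, 20)
    major, minor = (int(part) for part in version.split(".")[:2])
    return (major, minor) <= max_minor


if __name__ == "__main__":
    try:
        installed = metadata.version("protobuf")
    except metadata.PackageNotFoundError:
        print("protobuf is not installed")
    else:
        status = "OK" if protobuf_version_ok(installed) else "too new, downgrade to 3.20.x"
        print(f"protobuf {installed}: {status}")
```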

This concludes all the steps that we need to install MMCV and MMDetection correctly on both Ubuntu and Windows for RTX and GTX GPUs.

Verifying the Installation

Although we are done with the installation steps, one important part is left: checking that everything works correctly. That is, ensuring that we can import mmcv and mmdet, and that the libraries detect cudatoolkit for GPU utilization.

For that, open your terminal/command line, activate the mmcv environment, and enter the Python prompt (>>>) by typing python. Next, execute the following commands.

>>> import mmdet
>>> import mmcv
>>> mmcv.collect_env()
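If you prefer a script over the interactive prompt, the same check can be written as below. This sketch assumes the MMCV 1.x API used in this post, where mmcv.collect_env() returns a dictionary; `summarize_env` is a hypothetical helper that keeps only the GPU-related entries.

```python
def summarize_env(env: dict) -> dict:
    """Pick the entries of mmcv.collect_env() that matter for GPU support."""
    keys = ["CUDA available", "GPU 0", "CUDA_HOME", "PyTorch", "MMCV", "MMCV CUDA Compiler"]
    # .get() keeps this working when a key is absent, e.g. no "GPU 0" on CPU-only machines
    return {key: env.get(key) for key in keys}


if __name__ == "__main__":
    try:
        import mmdet  # noqa: F401  (only checks that the import succeeds)
        import mmcv
    except ImportError as err:
        print(f"Import failed: {err}")
    else:
        for key, value in summarize_env(mmcv.collect_env()).items():
            print(f"{key}: {value}")
```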

The output of mmcv.collect_env() will differ slightly depending on the environment it is run in. Let’s check each of them.

Ubuntu

As discussed earlier, our Ubuntu + GTX 1060 system had CUDA 11.6 and cuDNN 8.4.0 installed globally. The output for that system was the following.

{'sys.platform': 'linux', 'Python': '3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0]', 'CUDA available': True, 'GPU 0': 'NVIDIA GeForce GTX 1060', 'CUDA_HOME': '/usr/local/cuda-11.6', 'NVCC': 'Cuda compilation tools, release 11.6, V11.6.55', 'GCC': 'gcc (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0', 'PyTorch': '1.11.0', 'PyTorch compiling details': 'PyTorch built with:\n - GCC 7.3\n - C++ Version: 201402\n - Intel(R) oneAPI Math Kernel Library Version 2021.4-Product Build 20210904 for Intel(R) 64 architecture applications\n - Intel(R) MKL-DNN v2.5.2 (Git Hash a9302535553c73243c632ad3c4c80beec3d19a1e)\n - OpenMP 201511 (a.k.a. OpenMP 4.5)\n - LAPACK is enabled (usually provided by MKL)\n - NNPACK is enabled\n - CPU capability usage: AVX2\n - CUDA Runtime 11.3\n - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37\n - CuDNN 8.2\n - Magma 2.5.2\n - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.2.0, CXX_COMPILER=/opt/rh/devtoolset-7/root/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always 
-faligned-new -Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF, \n', 'TorchVision': '0.12.0', 'OpenCV': '4.5.5', 'MMCV': '1.5.1', 'MMCV Compiler': 'GCC 7.3', 'MMCV CUDA Compiler': '11.3'}

The 'CUDA_HOME': '/usr/local/cuda-11.6' entry shows that the globally installed CUDA 11.6 was detected. However, MMCV was compiled with CUDA 11.3, as the last entry, 'MMCV CUDA Compiler': '11.3', shows; this matches the CUDA Runtime 11.3 that PyTorch was built with. Together with 'CUDA available': True, this confirms that MMCV is set up to use the GPU.
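This comparison can be automated: the CUDA runtime that PyTorch was built with appears inside the 'PyTorch compiling details' string, and it should agree with 'MMCV CUDA Compiler'. A sketch assuming the dictionary format of mmcv.collect_env() shown above; `cuda_versions_match` is a hypothetical helper. It deliberately ignores CUDA_HOME (the global CUDA installation, if any), so it also works on machines where CUDA_HOME is None.

```python
import re


def cuda_versions_match(env: dict) -> bool:
    """Check that MMCV was built with the same CUDA version as PyTorch's CUDA runtime."""
    details = env.get("PyTorch compiling details", "")
    # PyTorch reports a line such as " - CUDA Runtime 11.3" in its build details
    match = re.search(r"CUDA Runtime (\d+\.\d+)", details)
    if match is None:
        return False  # CPU-only build or unexpected format
    return env.get("MMCV CUDA Compiler") == match.group(1)
```

Inside the mmcv environment, you could call it as cuda_versions_match(mmcv.collect_env()).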

The Ubuntu + RTX 3080 system had CUDA 11.4 and cuDNN 8.2.0 globally. The output was the following for that system.

{'sys.platform': 'linux', 'Python': '3.9.12 (main, Apr 5 2022, 06:56:58) [GCC 7.5.0]', 'CUDA available': True, 'GPU 0': 'NVIDIA GeForce RTX 3080', 'CUDA_HOME': '/usr/local/cuda', 'NVCC': 'Cuda compilation tools, release 11.4, V11.4.48', 'GCC': 'gcc (Ubuntu 9.4.0-1ubuntu1~20.04.1) 9.4.0', 'PyTorch': '1.11.0', 'PyTorch compiling details': 'PyTorch built with:\n - GCC 7.3\n - C++ Version: 201402\n - Intel(R) oneAPI Math Kernel Library Version 2021.4-Product Build 20210904 for Intel(R) 64 architecture applications\n - Intel(R) MKL-DNN v2.5.2 (Git Hash a9302535553c73243c632ad3c4c80beec3d19a1e)\n - OpenMP 201511 (a.k.a. OpenMP 4.5)\n - LAPACK is enabled (usually provided by MKL)\n - NNPACK is enabled\n - CPU capability usage: AVX2\n - CUDA Runtime 11.3\n - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37\n - CuDNN 8.2\n - Magma 2.5.2\n - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.2.0, CXX_COMPILER=/opt/rh/devtoolset-7/root/usr/bin/c++, CXX_FLAGS= -Wno-deprecated -fvisibility-inlines-hidden -DUSE_PTHREADPOOL -fopenmp -DNDEBUG -DUSE_KINETO -DUSE_FBGEMM -DUSE_QNNPACK -DUSE_PYTORCH_QNNPACK -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO -O2 -fPIC -Wno-narrowing -Wall -Wextra -Werror=return-type -Wno-missing-field-initializers -Wno-type-limits -Wno-array-bounds -Wno-unknown-pragmas -Wno-sign-compare -Wno-unused-parameter -Wno-unused-function -Wno-unused-result -Wno-unused-local-typedefs -Wno-strict-overflow -Wno-strict-aliasing -Wno-error=deprecated-declarations -Wno-stringop-overflow -Wno-psabi -Wno-error=pedantic -Wno-error=redundant-decls -Wno-error=old-style-cast -fdiagnostics-color=always -faligned-new 
-Wno-unused-but-set-variable -Wno-maybe-uninitialized -fno-math-errno -fno-trapping-math -Werror=format -Wno-stringop-overflow, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=ON, USE_NNPACK=ON, USE_OPENMP=ON, USE_ROCM=OFF, \n', 'TorchVision': '0.12.0', 'OpenCV': '4.5.5', 'MMCV': '1.5.1', 'MMCV Compiler': 'GCC 7.3', 'MMCV CUDA Compiler': '11.3'}

Windows

Neither of the Windows systems had CUDA or cuDNN globally installed.

The following is the output for Windows + GTX 1060 system.

{'sys.platform': 'win32', 'Python': '3.9.12 (main, Apr 4 2022, 05:22:27) [MSC v.1916 64 bit (AMD64)]', 'CUDA available': True, 'GPU 0': 'NVIDIA GeForce GTX 1060', 'CUDA_HOME': None, 'MSVC': 'Microsoft (R) C/C++ Optimizing Compiler Version 19.16.27030.1 for x64', 'GCC': 'n/a', 'PyTorch': '1.11.0', 'PyTorch compiling details': 'PyTorch built with:\n - C++ Version: 199711\n - MSVC 192829337\n - Intel(R) Math Kernel Library Version 2020.0.2 Product Build 20200624 for Intel(R) 64 architecture applications\n - Intel(R) MKL-DNN v2.5.2 (Git Hash a9302535553c73243c632ad3c4c80beec3d19a1e)\n - OpenMP 2019\n - LAPACK is enabled (usually provided by MKL)\n - CPU capability usage: AVX2\n - CUDA Runtime 11.3\n - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37\n - CuDNN 8.2\n - Magma 2.5.4\n - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.2.0, CXX_COMPILER=C:/cb/pytorch_1000000000000/work/tmp_bin/sccache-cl.exe, CXX_FLAGS=/DWIN32 /D_WINDOWS /GR /EHsc /w /bigobj -DUSE_PTHREADPOOL -openmp:experimental -IC:/cb/pytorch_1000000000000/work/mkl/include -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOCUPTI -DUSE_FBGEMM -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=OFF, USE_NNPACK=OFF, USE_OPENMP=ON, USE_ROCM=OFF, \n', 'TorchVision': '0.12.0', 'OpenCV': '4.5.5', 'MMCV': '1.5.2', 'MMCV Compiler': 'MSVC 192930140', 'MMCV CUDA Compiler': '11.3'}

Here, 'CUDA_HOME': None tells us that no global installation of CUDA was detected. But 'MMCV CUDA Compiler': '11.3' tells us that MMCV was built with CUDA 11.3, matching the cudatoolkit 11.3 that was installed with PyTorch using the conda command.

We get similar output for the Windows + RTX 3080 system as well.

{'sys.platform': 'win32', 'Python': '3.9.12 (main, Apr 4 2022, 05:22:27) [MSC v.1916 64 bit (AMD64)]', 'CUDA available': True, 'GPU 0': 'NVIDIA GeForce RTX 3080', 'CUDA_HOME': None, 'MSVC': 'Microsoft (R) C/C++ Optimizing Compiler Version 19.16.27045 for x64', 'GCC': 'n/a', 'PyTorch': '1.11.0', 'PyTorch compiling details': 'PyTorch built with:\n - C++ Version: 199711\n - MSVC 192829337\n - Intel(R) Math Kernel Library Version 2020.0.2 Product Build 20200624 for Intel(R) 64 architecture applications\n - Intel(R) MKL-DNN v2.5.2 (Git Hash a9302535553c73243c632ad3c4c80beec3d19a1e)\n - OpenMP 2019\n - LAPACK is enabled (usually provided by MKL)\n - CPU capability usage: AVX2\n - CUDA Runtime 11.3\n - NVCC architecture flags: -gencode;arch=compute_37,code=sm_37;-gencode;arch=compute_50,code=sm_50;-gencode;arch=compute_60,code=sm_60;-gencode;arch=compute_61,code=sm_61;-gencode;arch=compute_70,code=sm_70;-gencode;arch=compute_75,code=sm_75;-gencode;arch=compute_80,code=sm_80;-gencode;arch=compute_86,code=sm_86;-gencode;arch=compute_37,code=compute_37\n - CuDNN 8.2\n - Magma 2.5.4\n - Build settings: BLAS_INFO=mkl, BUILD_TYPE=Release, CUDA_VERSION=11.3, CUDNN_VERSION=8.2.0, CXX_COMPILER=C:/cb/pytorch_1000000000000/work/tmp_bin/sccache-cl.exe, CXX_FLAGS=/DWIN32 /D_WINDOWS /GR /EHsc /w /bigobj -DUSE_PTHREADPOOL -openmp:experimental -IC:/cb/pytorch_1000000000000/work/mkl/include -DNDEBUG -DUSE_KINETO -DLIBKINETO_NOCUPTI -DUSE_FBGEMM -DUSE_XNNPACK -DSYMBOLICATE_MOBILE_DEBUG_HANDLE -DEDGE_PROFILER_USE_KINETO, LAPACK_INFO=mkl, PERF_WITH_AVX=1, PERF_WITH_AVX2=1, PERF_WITH_AVX512=1, TORCH_VERSION=1.11.0, USE_CUDA=ON, USE_CUDNN=ON, USE_EXCEPTION_PTR=1, USE_GFLAGS=OFF, USE_GLOG=OFF, USE_MKL=ON, USE_MKLDNN=OFF, USE_MPI=OFF, USE_NCCL=OFF, USE_NNPACK=OFF, USE_OPENMP=ON, USE_ROCM=OFF, \n', 'TorchVision': '0.12.0', 'OpenCV': '4.5.5', 'MMCV': '1.5.2', 'MMCV Compiler': 'MSVC 192930140', 'MMCV CUDA Compiler': '11.3'}

Hopefully, everything worked for you as well. If you faced any errors, please let me know in the comment section. I will try my best to help.

FAQs (Why We Did Some Things the Way We Did)

Here are a few FAQs explaining some of the decisions that we made.

Q. Why did we not use pip install torch and pip install torchvision on the Ubuntu systems if we had CUDA and cuDNN installed globally?

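A. These two commands work completely fine on GTX GPUs but caused issues with RTX GPUs (at least with the RTX 30 series). Although the installation itself completes on an RTX 30 series GPU, there is a very high chance of errors when you try to move tensors to the CUDA device. This is most likely because, at the time of writing, the default pip wheels for PyTorch on Linux were built against CUDA 10.2, which does not support the Ampere architecture of the RTX 30 series.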
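Errors when moving tensors to the GPU are typically caused by the PyTorch build not containing kernels for the GPU's compute capability (8.6 for the RTX 30 series). This sketch compares the two using torch.cuda.get_device_capability() and torch.cuda.get_arch_list(); `build_supports_gpu` is a hypothetical helper, and it conservatively looks only for native sm_XX entries, ignoring PTX (compute_XX) entries.

```python
def build_supports_gpu(capability, arch_list):
    """Return True if a PyTorch build's arch list has native kernels for the GPU."""
    major, minor = capability
    return f"sm_{major}{minor}" in arch_list


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed.")
    else:
        if torch.cuda.is_available():
            # e.g. (8, 6) for an RTX 3080, (6, 1) for a GTX 1060
            capability = torch.cuda.get_device_capability(0)
            arches = torch.cuda.get_arch_list()
            print(f"GPU capability {capability}, build arches {arches}")
            print("Supported:", build_supports_gpu(capability, arches))
        else:
            print("No CUDA device visible to PyTorch.")
```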

Q. Why not use specific versions of MMCV according to the official docs?

A. Although we can do that, it is generally better to use the latest versions. As our installations work correctly with the latest versions, we will stick with them to get the latest updates and functionality of the library.

Q. Will the above installation steps work with other GTX and RTX GPUs?

A. As mentioned earlier, although the steps were not tested on other GTX 10 series and RTX 30 series GPUs, they should work fine, as GPUs within each series share the same architecture (Pascal for the GTX 10 series and Ampere for the RTX 30 series).
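The architecture argument can be checked against the build itself: the GTX 10 series cards listed earlier share compute capability 6.1 (Pascal), the RTX 30 series cards share 8.6 (Ampere), and the "NVCC architecture flags" in the collect_env() outputs above include both sm_61 and sm_86. A sketch; the capability table reflects NVIDIA's published values for these consumer cards, and the helper names are hypothetical.

```python
import re

# Compute capabilities of the GPUs listed in this post
COMPUTE_CAPABILITY = {
    "GTX 1060": (6, 1), "GTX 1070": (6, 1), "GTX 1070 Ti": (6, 1),
    "GTX 1080": (6, 1), "GTX 1080 Ti": (6, 1),
    "RTX 3060": (8, 6), "RTX 3060 Ti": (8, 6), "RTX 3070": (8, 6),
    "RTX 3070 Ti": (8, 6), "RTX 3080": (8, 6), "RTX 3080 Ti": (8, 6),
    "RTX 3090": (8, 6),
}


def sm_arches(nvcc_flags: str) -> set:
    """Extract native targets such as 'sm_61' from an NVCC -gencode flag string."""
    return set(re.findall(r"code=(sm_\d+)", nvcc_flags))


def unsupported_gpus(nvcc_flags: str) -> list:
    """List GPUs from the table whose capability has no matching sm_XX target."""
    arches = sm_arches(nvcc_flags)
    return [gpu for gpu, (major, minor) in COMPUTE_CAPABILITY.items()
            if f"sm_{major}{minor}" not in arches]
```

Passing the flag string from any of the outputs above should return an empty list, since those builds include sm_61 and sm_86.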

Summary and Conclusion

In this post, we went through the steps to install MMDetection on Ubuntu and Windows for RTX and GTX GPUs. In future posts, we will cover inference and custom training using MMDetection. Hopefully, this post helped you to install the MMCV and MMDetection libraries correctly.

If you have any doubts, thoughts, or suggestions, please leave them in the comment section. I will surely address them.

You can contact me using the Contact section. You can also find me on LinkedIn and Twitter.
