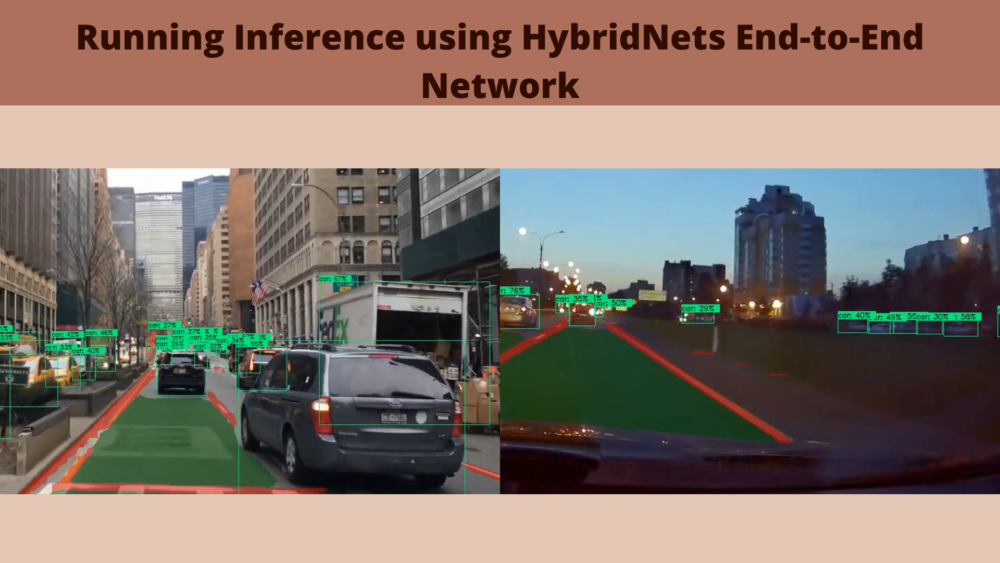

We discussed the HybridNets end-to-end perception network in the last two blog posts. HybridNets can perform both traffic object detection and semantic segmentation in real time. It is mainly meant for end-to-end vision perception tasks in autonomous driving. In this blog post, we will carry out comprehensive inference tests with HybridNets under different scenarios and weather conditions to gauge its capability.

If you want to take a look at the previous posts, you may visit these links:

Before moving into the technical details of the post, let’s check out the points that we will cover here.

- We will start by discussing the types of inference that we will carry out.

- Then we will set up the directory structure for carrying out inference using the HybridNets neural network.

- Next, we will run inference with HybridNets on the GPU and discuss the results in detail.

- We will also run a final inference on the CPU to check out how fast HybridNets is on the CPU.

This is going to be a practical post with minimal theory. If you want to get into the details of the HybridNets neural networks, please visit the previous posts. Mostly, we will deal with the inference Python script.

Different Types of Inference to Carry Out using HybridNets

In this blog post, we will carry out 5 different inference experiments. They cover different weather and lighting conditions, and all of the videos are dashcam footage recorded while driving.

We will start with a daytime video without any rain but with a lot of traffic.

Then we will run inference on a video in the evening setting but with clear weather. This will give us an idea of how the HybridNets model performs in low-lighting conditions.

The next one will be a daytime video in rainy weather. This will be a difficult one, as most of the roads and cars will not be clearly visible.

Then, we will use the most challenging video of all: a nighttime video in rainy weather. This will be a really difficult inference video for the HybridNets model.

The final test will be to check performance on different hardware. Instead of a GPU, we will use a CPU.

All these inference experiments should give us a proper picture of how efficiently the HybridNets model works under different scenarios. Also, as we will be testing on a CPU, we will get an idea of the forward pass speed of the model on mid-range hardware (we will discuss the hardware used for the experiments a bit later).

Please note that the HybridNets model was originally trained on the BDD100K dataset. Sometimes, it may not work well when the inference data is very different from what it was trained on. This is only from my personal experience and from the experiments that were carried out.

Directory Structure of the Project

The following is the directory structure for this project.

├── custom_inference_script
│   └── video_inference.py
├── HybridNets
│   ├── demo
│   ├── demo_result
│   ├── encoders
│   ├── hybridnets
│   ├── images
│   ├── projects
│   ├── __pycache__
│   ├── tutorial
│   ├── utils
│   ├── weights
│   ├── backbone.py
│   ├── hubconf.py
│   ├── hybridnets_test.py
│   ├── hybridnets_test_videos.py
│   ├── LICENSE
│   ├── requirements.txt
│   ├── train_ddp.py
│   ├── train.py
│   ├── val_ddp.py
│   ├── val.py
│   └── video_inference.py
└── input
    └── videos

- The custom_inference_script directory contains the custom script that we will use for running inference.

- We will copy the above-mentioned script to the HybridNets directory, which is the cloned HybridNets repository. We will execute all the code from within the HybridNets directory.

- The input/videos directory contains all the videos that we will run inference on.

When you download the zip file for this post, you will get access to the custom inference script. You will need to copy this script into the cloned HybridNets repository. The input/videos directory will contain one of the videos from this post. The other three videos are taken from YouTube, the credits for which you can find at the end of the post.
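The copy step can be done by hand, but a minimal Python sketch of it may look like the following. The function name `copy_inference_script` is hypothetical, and the sketch assumes the directory layout shown above, with both folders sitting under one project root.

```python
import shutil
from pathlib import Path


def copy_inference_script(project_root: str) -> Path:
    """Copy the custom script into the cloned HybridNets repository.

    Assumes the layout from this post:
      <project_root>/custom_inference_script/video_inference.py
      <project_root>/HybridNets/
    """
    root = Path(project_root)
    src = root / "custom_inference_script" / "video_inference.py"
    dst = root / "HybridNets" / "video_inference.py"

    if not src.is_file():
        raise FileNotFoundError(f"Custom script not found: {src}")
    if not dst.parent.is_dir():
        raise FileNotFoundError(f"HybridNets repository not found: {dst.parent}")

    # copy2 keeps file metadata and overwrites any existing file of the same name.
    shutil.copy2(src, dst)
    return dst
```

Calling `copy_inference_script(".")` from the project root would place the script next to the other files of the cloned repository.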

Setting Up Local System for HybridNets Inference

You can find all the details of setting up the local system in this post under the Creating a New Conda Environment heading.

The executable custom inference script will be provided with the downloadable files in this post.

The HybridNets Inference Script

The Python script that we use in this post for video inference is largely adapted from the script that we can find in the repository.

There are a few changes in the code that we will use. They are:

- Fixing the color for bounding boxes for traffic detection (green color).

- Using the red color for lane line detection/segmentation.

- Using green color for drivable area segmentation.

- We can provide the path to a single video file instead of providing the path to an entire directory while executing the script.

- Calculating the forward pass FPS and annotating it on the output frame as well.

- A simple change in the saving format of the output files. When the GPU is used for inference, _gpu will be appended to the end of the saved file name. When the CPU is used for inference, _cpu will be appended instead.
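Two of the changes in the list above, accepting a single video file and naming the output by device, can be sketched roughly as below. This is not the code from the downloadable script. The helper names `collect_videos` and `output_name` and the list of video extensions are assumptions made for illustration.

```python
from pathlib import Path
from typing import List

# Assumed set of extensions; the actual script may accept a different set.
VIDEO_EXTENSIONS = {".mp4", ".avi", ".mov", ".mkv"}


def collect_videos(source: str) -> List[Path]:
    """Return the videos to process for a file path or a directory path."""
    path = Path(source)
    if path.is_dir():
        # Directory: pick up every video file inside it.
        return sorted(
            p for p in path.iterdir() if p.suffix.lower() in VIDEO_EXTENSIONS
        )
    # Otherwise treat the source as a single video file.
    return [path]


def output_name(video_path: Path, use_cuda: bool) -> str:
    """Append _gpu or _cpu to the file name, keeping the extension."""
    suffix = "_gpu" if use_cuda else "_cpu"
    return f"{video_path.stem}{suffix}{video_path.suffix}"


print(output_name(Path("../input/videos/video_1.mp4"), use_cuda=True))
```

With this naming, the GPU and CPU runs of the same video do not overwrite each other's results.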

With these pointers in mind, let’s start running inference on videos using HybridNets.

Inference using HybridNets

First, we will run inference on all 4 videos using GPU, and the final inference experiment will be using the CPU.

Note: All the inference experiments and results shown in this post have been run on a laptop with 6 GB GTX 1060 GPU, i7 10th generation CPU, and 16 GB of RAM. Your FPS may vary depending on the hardware that you use.

All the code executions will be made within the HybridNets directory.
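If you prefer to queue all five experiments in one go, a small driver along the following lines could work. It is only a sketch: the function names are hypothetical, the flags are the ones used by the commands in this post, and the video file names assume that you saved the videos as video_1.mp4 to video_4.mp4 in input/videos. It defaults to a dry run that only prints the commands.

```python
import subprocess
import sys
from typing import List, Tuple


def build_command(video_name: str, use_cuda: bool) -> List[str]:
    """Build the inference command for one video (run from the HybridNets directory)."""
    return [
        sys.executable, "video_inference.py",
        "--source", f"../input/videos/{video_name}",
        "--load_weights", "weights/hybridnets.pth",
        "--cuda", str(use_cuda),
    ]


def experiment_plan() -> List[Tuple[str, bool]]:
    """Four GPU runs, followed by one CPU run on the first video."""
    plan = [(f"video_{i}.mp4", True) for i in range(1, 5)]
    plan.append(("video_1.mp4", False))
    return plan


def run_all(dry_run: bool = True) -> None:
    for video_name, use_cuda in experiment_plan():
        command = build_command(video_name, use_cuda)
        if dry_run:
            # Only show what would be executed.
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)


run_all(dry_run=True)
```

Switching to `run_all(dry_run=False)` would execute the runs one after another and stop at the first failing command.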

Only the first video is included in the downloadable zip file for this post. The other three videos are taken from YouTube. You can find the links to all videos at the end of this post.

Inference on Video with Clear Weather During Daytime

Let’s start with a clear weather video in the daytime. This is a simple scenario, although the video contains a lot of traffic, some of which is occluded.

Execute the following command in the terminal.

python video_inference.py --source ../input/videos/video_1.mp4 --load_weights weights/hybridnets.pth --cuda True

In the above command, --cuda True ensures that we use the GPU for inference. It is also True by default.
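As the flag takes the literal text True or False, the script needs to convert that string into a boolean. One common way to do this with argparse is sketched below. The helper name `boolean_string`, the `build_parser` function, and the default weights path are assumptions here, and the actual script may parse its arguments differently.

```python
import argparse


def boolean_string(value: str) -> bool:
    """Convert the literal strings 'True' / 'False' into a bool."""
    if value not in {"True", "False"}:
        raise argparse.ArgumentTypeError(
            f"expected True or False, got {value!r}"
        )
    return value == "True"


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(description="Sketch of the inference CLI")
    parser.add_argument("--source", type=str, required=True,
                        help="path to a video file")
    # Assumed default; the post always passes this path explicitly.
    parser.add_argument("--load_weights", type=str,
                        default="weights/hybridnets.pth")
    # True by default, so the GPU is used unless --cuda False is passed.
    parser.add_argument("--cuda", type=boolean_string, default=True)
    return parser


args = build_parser().parse_args(
    ["--source", "../input/videos/video_1.mp4", "--cuda", "False"]
)
print(args.cuda)
```

A plain `type=bool` would not work for this purpose, because `bool("False")` evaluates to `True` for any non-empty string.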

Let’s check out what we have in the terminal for the first video.

DETECTED SEGMENTATION MODE FROM WEIGHT AND PROJECT FILE: multiclass
video: ../input/videos/video_1.mp4
frame: 1030
second: 169.82274889945984
fps: 12.065147376749807

It is running at about 12 FPS, which is not bad considering that we are using a laptop GPU and that the model is doing both segmentation and detection. In fact, all the videos run at between 11 and 12 FPS on this particular hardware. As we also annotate the FPS on the video frames, we will not need to check the terminal output each time.

The model is performing quite well here. It is able to detect almost all vehicles, even the ones that are partially occluded. The drivable area segmentation gets confused at the crossings, but the lane detection is working very well.
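For reference, forward pass FPS is usually obtained by timing only the model call and averaging over the frames processed so far. The following is a minimal sketch of that idea and not the code from the inference script. `ForwardPassTimer` and `timed_forward` are hypothetical names, and `model_fn` stands in for the real model.

```python
import time


class ForwardPassTimer:
    """Accumulate forward pass times and report the average FPS."""

    def __init__(self) -> None:
        self.total_seconds = 0.0
        self.frames = 0

    def add(self, seconds: float) -> None:
        self.total_seconds += seconds
        self.frames += 1

    @property
    def average_fps(self) -> float:
        if self.total_seconds == 0.0:
            return 0.0
        return self.frames / self.total_seconds


def timed_forward(model_fn, frame, timer: ForwardPassTimer):
    """Run one forward pass and record how long it took."""
    # Note: with a PyTorch model on the GPU, CUDA calls are asynchronous,
    # so torch.cuda.synchronize() is needed before reading the clock.
    start = time.perf_counter()
    output = model_fn(frame)
    timer.add(time.perf_counter() - start)
    return output


timer = ForwardPassTimer()
for frame in range(5):
    # A trivial stand-in for the model, used only to exercise the timer.
    timed_forward(lambda x: x * 2, frame, timer)
print(f"Average forward pass FPS: {timer.average_fps:.1f}")
```

Because only the model call is timed, this number excludes video decoding, drawing, and writing the output file.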

Inference on Video in Clear Weather and Evening Time

This is going to be a slightly challenging video. It is during the evening time with low-lighting conditions. Let’s execute the script.

python video_inference.py --source ../input/videos/video_2.mp4 --load_weights weights/hybridnets.pth --cuda True

The following is the output.

Mostly, it is working exceptionally well. Even in low-light conditions, the drivable area and lane line segmentation are almost perfect. There are false detections for the traffic objects in a few places. Then again, we can attribute some of these to the low light.

Inference on Video in Rainy Weather in Daytime

Now, we have a video with rainy weather during the daytime.

python video_inference.py --source ../input/videos/video_3.mp4 --load_weights weights/hybridnets.pth --cuda True

This is a very challenging video, so let’s see how the model performs here.

The HybridNets model is not performing very well here. It is completely missing one of the lane lines and is unable to detect some of the cars on the other side of the road. Also, it is detecting some of the buildings as cars. It looks like the model performs somewhat poorly in real-world rainy conditions.

Inference on Video in Rainy Weather at Night

This is going to be perhaps the most challenging scenario for the HybridNets model. It is a video with rainy weather at night.

python video_inference.py --source ../input/videos/video_4.mp4 --load_weights weights/hybridnets.pth --cuda True

The following is the output.

The limitations of the model are quite visible here. It is unable to detect the lane lines with good accuracy. The drivable area segmentation is also not that accurate. Moreover, it is detecting a few of the trees and signboards as vehicles.

It looks like the HybridNets model’s weakness is rainy weather and low-light conditions. But most probably, we can overcome these by training it on more such images.

Inference on CPU

For one final experiment, let’s run inference on the CPU.

python video_inference.py --source ../input/videos/video_1.mp4 --load_weights weights/hybridnets.pth --cuda False

We need to use the --cuda False flag to use the CPU instead of the GPU. The following is the output on the terminal.

DETECTED SEGMENTATION MODE FROM WEIGHT AND PROJECT FILE: multiclass
video: ../input/videos/video_1.mp4
frame: 1030
second: 431.19740629196167
fps: 2.3886971140605424

It is running at just over 2 FPS on the laptop's i7 CPU. This is not bad, considering that many models run slower than this even when doing only object detection. The following is the output video. The detections remain the same.
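The fps value in the CPU terminal output matches the frame count divided by the elapsed seconds. The short check below reproduces that arithmetic with the numbers from the log. The function name `frames_per_second` is only illustrative.

```python
def frames_per_second(frame_count: int, seconds: float) -> float:
    """Average FPS over a whole run: frames processed / elapsed time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return frame_count / seconds


# Values copied from the CPU terminal output above.
cpu_fps = frames_per_second(1030, 431.19740629196167)
print(f"CPU: {cpu_fps:.2f} FPS, {1.0 / cpu_fps:.3f} seconds per frame")
```

Reading the result as seconds per frame can be more intuitive at low speeds: the CPU run spends a little over 0.4 seconds on each frame.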

Summary and Conclusion

In this blog post, we ran several inference experiments using the HybridNets end-to-end vision perception model. We used videos with various weather and lighting conditions. This gave us a good idea of the capability of the HybridNets model and what we can do to improve it. I hope that this post was helpful to you.

If you have any doubts, thoughts, or suggestions, please leave them in the comment section. I will surely address them.

You can contact me using the Contact section. You can also find me on LinkedIn and Twitter.

Video Credits

video_1.mp4: https://www.youtube.com/watch?v=7HaJArMDKgI

video_2.mp4: https://www.youtube.com/watch?v=nfE11L7CxAQ

video_3.mp4: Video by Joshua Miranda: https://www.pexels.com/video/vehicles-traveling-on-expressway-while-raining-5004303/

video_4.mp4: https://www.youtube.com/watch?v=iBqjG7EI3Ts

I am trying to fine-tune it with an additional class “rider” but am getting a circular import error (from hybridnets.dataset import BddDataset). Can you help me resolve the issue?

Please write a blog post on how to retrain YOLOP or HybridNets with an additional class, if possible.

Hello Muhammad Adil. I will surely put that on my plate. Can you please let me know which dataset you are using?

BDD100k

Ok. But I think, the model has already been trained on BDD100K. Still, I will try to put up a custom training post.