Training Deep Neural Networks is time-consuming and difficult. Batch Normalization is a technique that can help to speed up the training of neural networks. In this article, you will get to know what Batch Normalization is and how it helps in training of deep neural networks.

Difficulty in Training Deep Neural Networks

Training very deep neural networks requires a considerable amount of hardware support (GPUs) and time as well. And furthermore, the problems posed by vanishing gradients due to backpropagation makes the job even more difficult. All in all, training deep neural networks is difficult.

So, if we break down the above reasons, we can say that the constant update of weights in neural networks is what makes the training difficult.

Batch Normalization

When faced with the vanishing gradients problem, Xavier Initialization and He Initialization can help a lot. But many times they only work as temporary solutions as the problem returns further into the training.

This is where Batch Normalization (proposed by Sergey Ioffe and Christian Szegedy) can help. The main problem is that during training, the input of each layer changes as the parameters in the previous layer keep changing.

The changing parameters slow down training by a great factor. Sergey Ioffe and Christian Szegedy called this the internal covariate shift.

According to Sergey Ioffe and Christian Szegedy as proposed in their paper Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift –

… distribution of each layer’s inputs changes during training, as the parameters of the previous layers change. This slows down the training by requiring lower learning rates and careful parameter initialization, and makes it notoriously hard to train models with saturating nonlinearities. We refer to this phenomenon as internal covariate shift …

How Does Batch Normalization Help?

Batch Normalization calculates the mean and standard deviation of the inputs in mini-batches. Then these values are used to standardize the inputs and update the parameters in each layer. This is the process of zero- centering and normalizing the inputs.

The process is used during the training of neural networks and the standardized values are applied to the input layers and subsequent hidden layers.

In the above process, two new parameters for scaling and shifting of the layers are also used. The shifting parameter is called Beta and the scaling parameter is called Gamma.

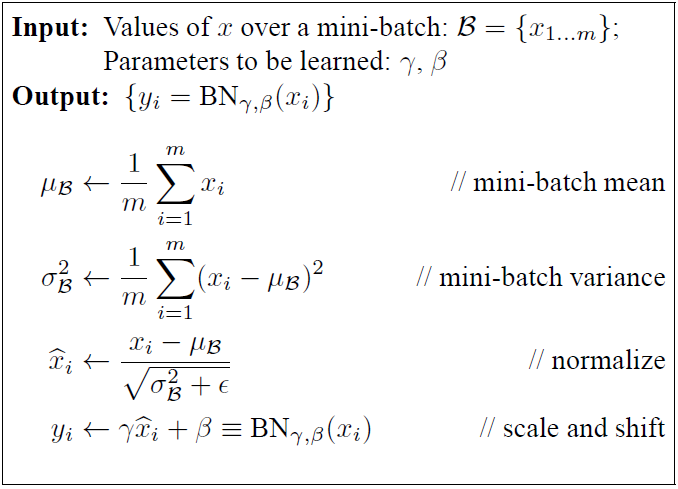

The following equations from the Batch Normalization paper may help you understand the scaling and shifting in a better way:

The first equation is for calculating the mean of the mini-batches. After calculating the mean, the second equation is for calculating the variance. And the third equation is for normalizing the inputs. Till this step, the zero-centering and normalization of the inputs are complete. In the final equation, the two new parameters (Beta and Gamma) are shifting and scaling respectively.

The Benefits

The authors mentioned many real benefits in the paper that we will be getting when using Batch Normalization while training neural networks.

Batch Normalization makes the network more resilient to vanishing and exploding gradients:

In the paper, the authors mention that the problem remains checked even when using higher learning rates which can easily lead to scaling of layer parameters. This causes a problem during the backpropagation of the gradients. But Batch Normalization helps in checking this issue.

More stable network training even when using saturating functions:

The experiments also reveal that the network’s training remained stable with Batch Normalization even when using sigmoid activation functions.

State of the art results with image classification:

When applied to Image Classification, Batch Normalization is able to achieve state of the art results (the likes of Inception model) with 14% less training.

No need for Dropout:

In the paper (section 4.2.1), the authors mention that it can also fulfill some of the goals of Dropout. Dropout is a really important procedure to reduce overfitting. But it looks like Batch Normalization eliminates the need to use Dropout while being able to reduce overfitting.

For In-Depth Reading

- The Original Batch Normalization Paper

- Understanding the difficulty of training deep feedforward neural networks (Xavier Glorot and Yoshua Bengio)

Conclusion

In this article, you got to know about Batch Normalization and its benefits while training deep neural networks. You should surely check out the above resources if you want to get into the mathematical details.

Leave your thoughts in the comment section. If you gained something from the article, then like and share it with others and subscribe to the newsletter. You can follow me on Twitter and Facebook to get regular updates. I am on LinkedIn as well.

2 thoughts on “Neural Networks: Speed Up Training with Batch Normalization”