Autotrain is a no-code platform from Hugging Face to train, evaluate, and deploy machine learning and deep learning models. In this article, we will use Hugging Face Autotrain to train a Small Language Model (SLM).

The Hugging Face Autotrain platform offers several functionalities for training:

- Computer Vision models

- Machine Learning models

- And LMs & LLMs

However, in this article we will focus on training a language model for instruction following using the Autotrain platform.

Although Autotrain can be used directly from the Hugging Face platform, we will use a local installation. So, in a way, we will use some code rather than the no-code approach.

We will cover the following topics in this article:

- First, we will install the Hugging Face Autotrain library.

- Then we will go through a simple codebase to train the GPT2 Large model.

- We will use the Open Assistant Guanaco dataset for training the GPT2 model.

- After training, we will use the trained model (pushed to the Hugging Face hub) and run inference.

Prerequisites for using Autotrain to Train LLMs

There are a few prerequisites to use the Autotrain platform/API.

First, we need a Hugging Face account as we will push the model to the hub. If you do not have one, you can create one by visiting the Hugging Face website.

We also need an access token with read/write permission. You can create a new token by going to Settings => Access Tokens and clicking New Token.
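Because the token grants write access to your account, it is safer not to paste it directly into a notebook cell. Below is a minimal sketch of one way to handle this, assuming the token has been exported as the HF_TOKEN environment variable. The helper name get_hf_token is hypothetical and is not part of Autotrain.

```python
import os


def get_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the Hugging Face token stored in an environment variable.

    Assumption: the token was exported beforehand, for example with
    `export HF_TOKEN=...` in the shell that launches the notebook.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        # Fail early instead of discovering the problem when pushing to the hub.
        raise RuntimeError(
            f"Set the {env_var} environment variable to a token with write access."
        )
    return token
```

The returned string can then be passed wherever the article uses the YOUR_HF_TOKEN placeholder.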

Installing Hugging Face Autotrain

We can install the Autotrain library by executing the following command in a local terminal, or in a Kaggle or Colab notebook cell (where the command is prefixed with !).

pip install autotrain-advanced

OR

!pip install autotrain-advanced

That’s all the setup that we need.

Project Directory Structure

Following is the project directory structure.

├── autotrain_gpt2_large_openassistant_guanaco.ipynb
└── inference.ipynb

We have just two notebooks, one for training and another for inference.

The article comes with a downloadable zip file containing a training notebook and an inference notebook. You can run the training notebook locally, on Kaggle, or on Colab just by changing the user credentials and API token.

The notebooks also install the library so there are no dependency issues while running on cloud platforms.

The Open Assistant Guanaco Dataset

Open Assistant Guanaco is an instruction-tuning dataset. It is typically used to train LLMs to follow instructions. It is a subset of the larger Open Assistant dataset.

To know more, I highly recommend going through the Instruction Tuning OPT-125M article.
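To get a feel for what one training sample looks like, here is a small sketch that splits a conversation into turns. It assumes the "### Human:" and "### Assistant:" markers, which are taken from the prompt template used in the inference section of this article. The sample string is made up for illustration and is not copied from the dataset, and the function name split_turns is hypothetical.

```python
import re

# Assumed turn markers, taken from the prompt template used for inference.
TURN_PATTERN = re.compile(r"### (Human|Assistant):")


def split_turns(text: str) -> list[tuple[str, str]]:
    """Split one Guanaco-style sample into (speaker, message) pairs."""
    parts = TURN_PATTERN.split(text)
    # With one capture group, re.split returns
    # [prefix, speaker, message, speaker, message, ...].
    speakers = parts[1::2]
    messages = parts[2::2]
    return [(speaker, message.strip()) for speaker, message in zip(speakers, messages)]


# Made-up sample in the assumed format.
sample = "### Human: What is 2 + 2?### Assistant: 2 + 2 equals 4.### Human: Thanks!"
for speaker, message in split_turns(sample):
    print(f"{speaker}: {message}")
```

Since the entire conversation lives in a single string, the dataset needs only one text column for training.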

Training GPT2 Large Model using Hugging Face Autotrain

Let’s get to the coding part where we train the GPT2 model using Autotrain.

The training code is present in autotrain_gpt2_large_openassistant_guanaco.ipynb. The first three code cells install the library, update the packages if needed, and show the command line arguments available for LLM training.

Installation:

!pip install -U autotrain-advanced

Update packages:

!autotrain setup

The above command updates any packages if necessary. For example, in my case, it updated the xformers library.

Show LLM Training commands:

!autotrain llm --help

It will output several command line arguments. However, we will only get into the details of those that we use while training.

Executing the Training Command

Next is the training command. It is quite simple to start the training. However, we need to be careful while passing the command line arguments.

!autotrain llm --train \
    --project-name "gpt2-large-open-assistant-guanaco" \
    --model "openai-community/gpt2-large" \
    --data-path "timdettmers/openassistant-guanaco" \
    --lr 0.0002 \
    --batch-size 2 \
    --epochs 1 \
    --text_column "text" \
    --token YOUR_HF_TOKEN \
    --push-to-hub \
    --save_total_limit 1 \
    --train-split "train" \
    --valid-split "test" \
    --repo-id "USERNAME/REPO_NAME" \
    --trainer sft

As we discussed earlier, Autotrain supports several training tasks. Here, we are interested in running LLM training, so the command starts with autotrain llm. Following are the important command line arguments from above:

- --train: This tells the command to run in training mode. There are also --deploy and --inference options.

- --model: This is the model tag from the Hugging Face model page. We are using the GPT2 Large model here.

- --data-path: We can pass either a local data path (a CSV, JSONL, or text file) or a dataset tag from Hugging Face. Here, it is the Open Assistant Guanaco dataset tag.

- --text_column: Most instruction tuning datasets have a column containing the entire chat/conversation. For the dataset that we are using, it is the text column.

- --token: This is the Hugging Face token you can find in Settings => Access Tokens. Be sure to give the token both read and write access.

- --push-to-hub: This tells the command to push the model weights and tokenizer to the Hugging Face hub after training.

- --train-split and --valid-split: The dataset has a training split and a test split. If we provide --valid-split, that split will be used for evaluation.

- --repo-id: This is the repository where we want our trained model to be pushed. It should be of the form Username/A_Unique_Repo_Name. Replace it with your user name and the name that you want for the repository.

- --trainer: We can either use the generic trainer (default) or the Supervised Fine-Tuning (SFT) trainer. We are using the latter here, which is better suited to instruction tuning the GPT2 model.
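Outside a notebook, the same command can be assembled as a Python argument list, which avoids mistakes with line continuations and quoting. The sketch below only reuses the flags and values from the command above. The function name build_train_command is hypothetical, and the token and repository values are the same placeholders as in the article.

```python
import shlex


def build_train_command(model, data_path, project_name, repo_id, token):
    """Assemble the article's training command as a list of arguments."""
    return [
        "autotrain", "llm", "--train",
        "--project-name", project_name,
        "--model", model,
        "--data-path", data_path,
        "--lr", "0.0002",
        "--batch-size", "2",
        "--epochs", "1",
        "--text_column", "text",
        "--token", token,
        "--push-to-hub",
        "--save_total_limit", "1",
        "--train-split", "train",
        "--valid-split", "test",
        "--repo-id", repo_id,
        "--trainer", "sft",
    ]


cmd = build_train_command(
    model="openai-community/gpt2-large",
    data_path="timdettmers/openassistant-guanaco",
    project_name="gpt2-large-open-assistant-guanaco",
    repo_id="USERNAME/REPO_NAME",  # placeholder, replace with your own
    token="YOUR_HF_TOKEN",         # placeholder, replace with your own
)

# Print the command for inspection before running it.
print(shlex.join(cmd))
# To launch training, one option is: subprocess.run(cmd, check=True)
```

Passing a list rather than a single string means no shell quoting is needed when the command is eventually executed.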

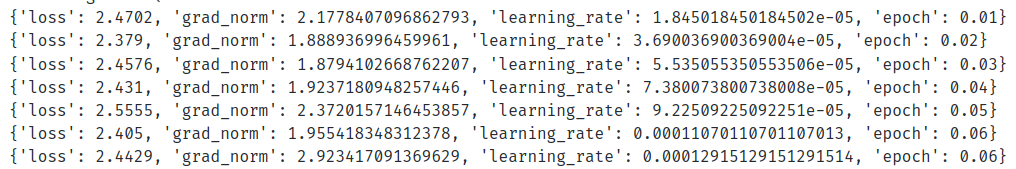

That’s it. The training will start automatically and we get the logs every 25 steps by default.

After training, the model will be pushed to the hub. For example, for the above run, we can find the model here. This has been made public so that others can also use it and loading it will not require Hugging Face credentials.

Inference using the Autotrain Model Pushed to Hub

We will use the above model that has been pushed to the hub for inference. The code for inference resides in the inference.ipynb notebook. The inference code here is very similar to that of the previous two articles. Do give them a read to know more about instruction tuning language models on different datasets.

First, we need to import all the necessary libraries and modules.

from transformers import (
    AutoModelForCausalLM,
    logging,
    pipeline,
    AutoTokenizer
)
import torch

Second, set the computation device, and load the model & the tokenizer.

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = AutoModelForCausalLM.from_pretrained('sovitrath/gpt2_large_openassistant_guanaco_qlora')
tokenizer = AutoTokenizer.from_pretrained('sovitrath/gpt2_large_openassistant_guanaco_qlora')

The above model and tokenizer are loaded from the Hugging Face hub repository, which has been made public.

Third, initialize the text generation pipeline.

pipe = pipeline(
    task='text-generation',
    model=model,
    tokenizer=tokenizer,
    max_length=256, # Prompt + new tokens to generate.
    device=device # Move the already loaded model to the selected device.
)

Fourth, define a prompt template and format it with the user prompt.

template = '### Human: {}### Assistant:'
prompt = 'Write Python code to add two numbers.'
prompt = template.format(prompt)

We use the same template as the training dataset.

Finally, pass the prompt through the pipeline.

outputs = pipe(
    prompt,
    do_sample=True,
    temperature=0.7,
    top_k=10,
    top_p=0.95,
    repetition_penalty=1.1
)
print(outputs[0]['generated_text'])
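To build some intuition for the temperature, top_k, and top_p arguments used above, here is a simplified, pure Python sketch of how such filters can narrow down the next-token distribution. It is an illustration under stated assumptions and not the Transformers implementation: the function name sampling_distribution is hypothetical, the logits are made up, and repetition_penalty is not modeled.

```python
import math


def sampling_distribution(logits, temperature=0.7, top_k=10, top_p=0.95):
    """Return {token_index: probability} after temperature, top-k, and top-p filtering."""
    # Temperature: divide the logits before softmax. Values below 1 sharpen
    # the distribution, values above 1 flatten it.
    scaled = [value / temperature for value in logits]

    # Top-k: keep only the k highest-scoring tokens, best first.
    ranked = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:top_k]

    # Softmax over the surviving tokens (subtract the max for numerical stability).
    best = scaled[ranked[0]]
    weights = [math.exp(scaled[i] - best) for i in ranked]
    total = sum(weights)
    probs = [weight / total for weight in weights]

    # Top-p: keep the smallest set of top tokens whose cumulative
    # probability reaches top_p.
    kept = []
    cumulative = 0.0
    for index, prob in zip(ranked, probs):
        kept.append((index, prob))
        cumulative += prob
        if cumulative >= top_p:
            break

    # Renormalize so the kept probabilities sum to 1.
    norm = sum(prob for _, prob in kept)
    return {index: prob / norm for index, prob in kept}


# Made-up logits for a four-token vocabulary.
print(sampling_distribution([2.0, 1.0, 0.0, -1.0], temperature=1.0, top_k=3, top_p=0.9))
```

With do_sample=True, the next token is drawn at random from a filtered distribution of this kind, which is why repeated runs of the same prompt can give different responses.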

Here is the response that we get (truncated manually).

### Human: Write Python code to add two numbers.### Assistant: Here's an example Python script that adds two numbers:

```python
def add(a, b):
    return a + b
```

This script uses the `add` function to perform addition on two numbers. The `a` and `b` arguments specify the input values for the addition.

The `b` argument specifies the index of the first number...

As we can see, the GPT2 Large model successfully writes a Python function for adding two numbers and follows it with a short description. After that, however, it starts to hallucinate. This is expected because we trained the model for just one epoch. Furthermore, there are now better models with fewer than 1 billion parameters which will surpass GPT2 with 1 epoch of training. We will try those models in future articles.
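The response above was truncated manually. Part of that cleanup can be automated with plain string handling. The sketch below assumes the same "### Human:" and "### Assistant:" markers as the prompt template. The function name extract_reply is hypothetical, and the example string is made up.

```python
def extract_reply(generated_text: str) -> str:
    """Return the first assistant turn from text generated with the article's template."""
    assistant_tag = "### Assistant:"
    human_tag = "### Human:"
    # Drop the echoed prompt: keep only what follows the first assistant tag.
    _, _, reply = generated_text.partition(assistant_tag)
    # Cut the text where the model starts writing a new human turn, if it does.
    reply, _, _ = reply.partition(human_tag)
    return reply.strip()


# Made-up generated text in the assumed format.
example = "### Human: Say hello.### Assistant: Hello there!### Human: Thanks### Assistant: Sure"
print(extract_reply(example))
```

This only removes extra conversation turns. It cannot detect incorrect statements inside the reply itself, and it returns an empty string when the assistant tag is missing.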

Further Reading

- Transformer Neural Network

- Text Generation with Transformers

- Character Level Text Generation using LSTM

- Word Level Text Generation using LSTM

Summary and Conclusion

In this short article, we walked through the Hugging Face Autotrain workflow. Starting from installation, through training the GPT2 Large model, to running inference, we covered each part briefly. I hope this article was useful to you.

If you have any doubts, thoughts, or suggestions, please leave them in the comment section. I will surely address them.

You can contact me using the Contact section. You can also find me on LinkedIn and X.
