Fine Tuning Phi 1.5 using QLoRA

In this article, we fine-tune the Phi 1.5 model with QLoRA on the Stanford Alpaca dataset using Hugging Face Transformers. ...

In this article, we use Hugging Face AutoTrain, a no-code platform, to train the GPT2 Large model to follow instructions. ...

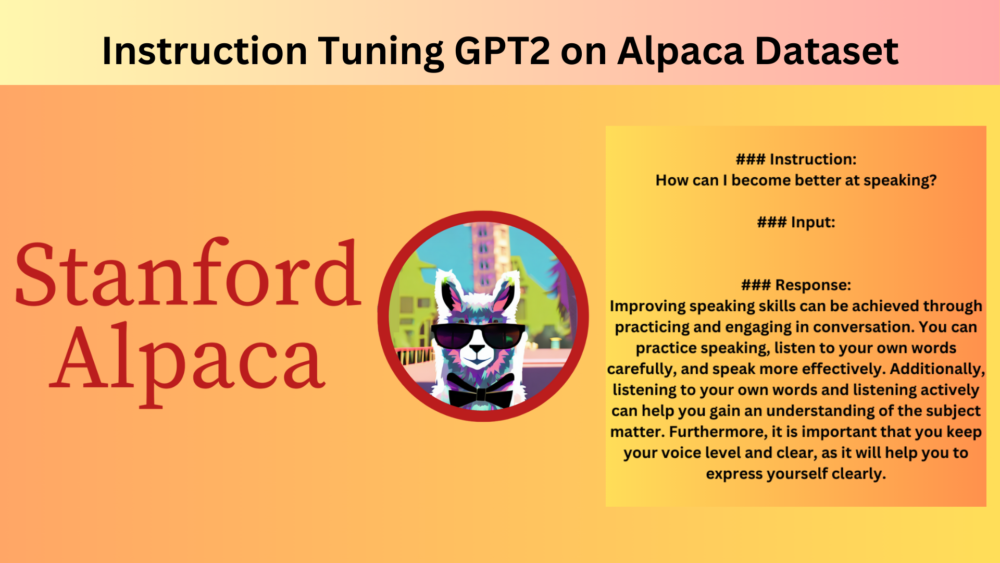

In this article, we instruction-tune the GPT2 Base model on the Alpaca dataset, using the Hugging Face Transformers library along with the SFT Trainer pipeline. ...

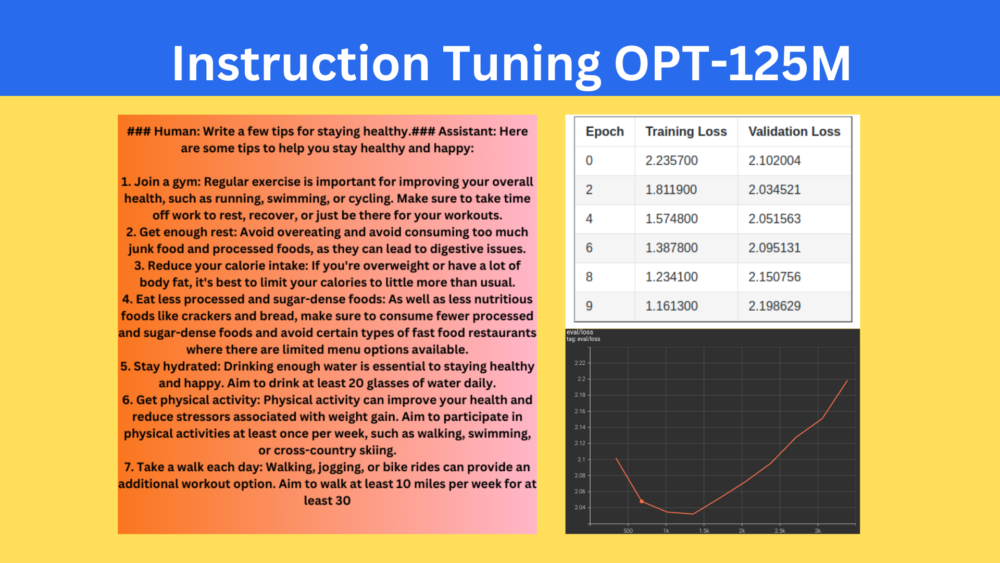

In this article, we carry out instruction tuning of the OPT-125M model by training it on the Open Assistant Guanaco dataset using the Hugging Face Transformers library. ...

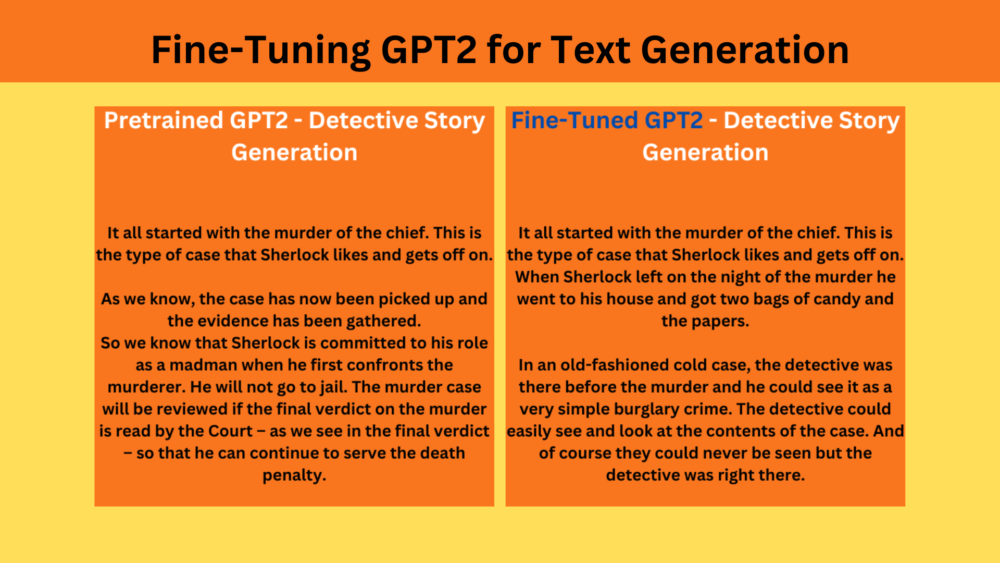

In this article, we train the DistilGPT2 model for detective story generation. We use the Hugging Face Transformers library to fine-tune the model on Arthur Conan Doyle's collection of Sherlock Holmes stories. ...
