Hugging Face Autotrain – Getting Started

In this article, we use the Hugging Face Autotrain no-code platform to train the GPT2 Large model to follow instructions. ...
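Autotrain needs no training code, but the data still has to be prepared before upload. The sketch below assumes that the LLM trainer reads a CSV with a single `text` column. That is a common default, but confirm the column mapping in the Autotrain UI. The `### Instruction:` / `### Response:` template and the sample records are hypothetical placeholders, not the article's data.

```python
import csv
import io

# Hypothetical instruction-response pairs standing in for a real dataset.
records = [
    {"instruction": "Name three primary colors.", "output": "Red, blue, and yellow."},
    {"instruction": "Translate 'hello' to French.", "output": "Bonjour."},
]


def to_text(rec: dict) -> str:
    """Flatten one record into a single prompt/response string.

    The '### Instruction:' / '### Response:' markers are an assumed template,
    not one prescribed by Autotrain.
    """
    return f"### Instruction:\n{rec['instruction']}\n\n### Response:\n{rec['output']}"


def build_csv(rows: list[dict]) -> str:
    """Return CSV content with one 'text' column (assumed Autotrain layout)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["text"])
    writer.writeheader()
    for rec in rows:
        # Multi-line strings are quoted automatically by the csv module.
        writer.writerow({"text": to_text(rec)})
    return buf.getvalue()


if __name__ == "__main__":
    print(build_csv(records))
```

Saving the returned string to a `.csv` file gives a file that could be uploaded as the training split.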

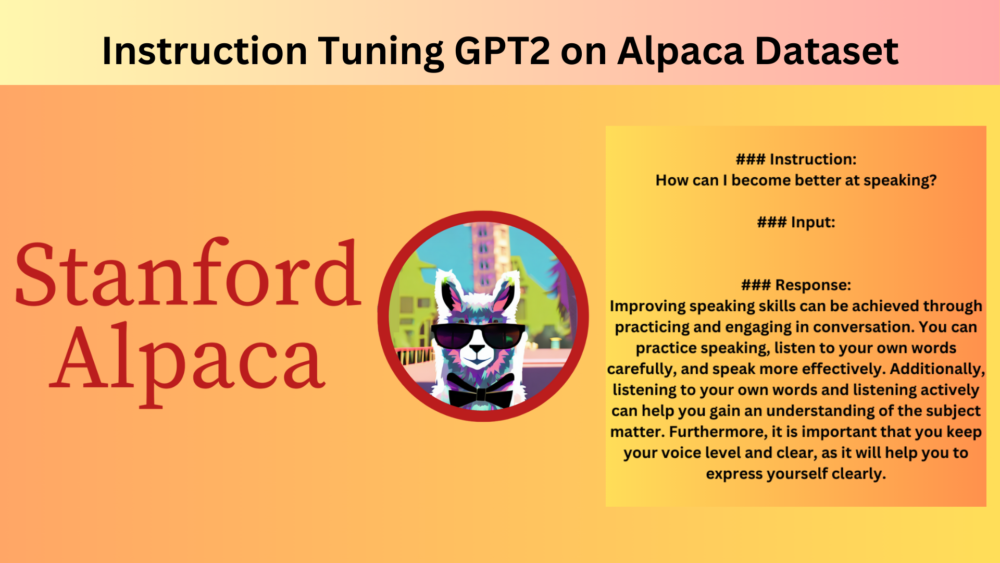

In this article, we instruction-tune the GPT2 Base model on the Alpaca dataset, using the Hugging Face Transformers library along with the `SFTTrainer` from the Hugging Face TRL library. ...
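The core data step in this kind of SFT setup is turning each Alpaca record into one training string. The sketch below assumes the original Stanford Alpaca prompt templates and GPT-2's `<|endoftext|>` end-of-sequence token. The article's exact template is not shown here, and `format_alpaca` is a hypothetical helper name.

```python
# Stanford Alpaca prompt templates (assumed; the article's template may differ).
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n{output}"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n{output}"
)


def format_alpaca(example: dict, eos_token: str = "<|endoftext|>") -> str:
    """Render one Alpaca record (instruction/input/output) as a training string.

    Records with an empty 'input' use the shorter template. Appending GPT-2's
    end-of-text token marks where the response stops.
    """
    has_input = bool(example.get("input", "").strip())
    template = PROMPT_WITH_INPUT if has_input else PROMPT_NO_INPUT
    # str.format ignores the unused 'input' keyword for the no-input template.
    text = template.format(
        instruction=example["instruction"],
        input=example.get("input", ""),
        output=example["output"],
    )
    return text + eos_token


if __name__ == "__main__":
    print(format_alpaca({"instruction": "Add 2 and 3.", "input": "", "output": "5"}))
```

A function like this can be adapted for the `formatting_func` argument of TRL's `SFTTrainer`. Whether that argument receives single examples or batches has varied across TRL versions, so check the version you have installed.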

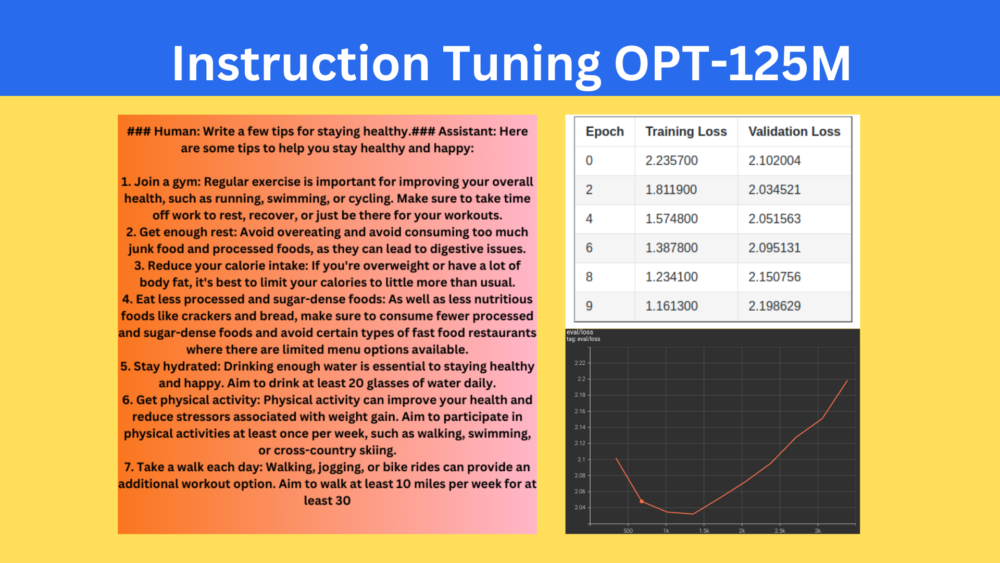

In this article, we instruction-tune the OPT-125M model on the Open Assistant Guanaco dataset using the Hugging Face Transformers library. ...
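Unlike Alpaca, Guanaco stores each conversation as a single text field. The sketch below assumes the `### Human:` / `### Assistant:` markers used in the `timdettmers/openassistant-guanaco` copy on the Hub. It splits a record into turns and builds an inference prompt in the same format. `split_turns` and `build_prompt` are hypothetical helpers.

```python
import re

# Matches an assumed role marker such as "### Human:" or "### Assistant:".
TURN_MARKER = re.compile(r"### (Human|Assistant):")


def split_turns(text: str) -> list[tuple[str, str]]:
    """Split a Guanaco-style conversation into (role, message) pairs."""
    # With one capture group, re.split yields [prefix, role1, msg1, role2, msg2, ...].
    parts = TURN_MARKER.split(text)
    return [(role, msg.strip()) for role, msg in zip(parts[1::2], parts[2::2])]


def build_prompt(question: str) -> str:
    """Build a generation prompt that ends where the model should start answering."""
    return f"### Human: {question}### Assistant:"


if __name__ == "__main__":
    sample = "### Human: Hi there.### Assistant: Hello! How can I help?"
    print(split_turns(sample))
    print(build_prompt("What is OPT-125M?"))
```

Keeping the prompt layout identical between training and inference matters. A model tuned on this format expects the `### Assistant:` marker before it starts its reply.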