Face detection has been improving at a great pace over the past few years. And deep learning models help speed up the improvement in the field of face detection to a great extent. Due to this, there are many state-of-the-art deep learning libraries present today. Dlib is one of them. Therefore, in this post, we will carry out Face Detection with Dlib using CNN (Convolutional Neural Networks).

In the previous post, we covered face detection with Dlib using HOG and Linear SVM. Do check out that post if you want to get a high level overview of the Dlib library and also carry out face detection using traditional machine learning models.

This post is a continuation, where we will use deep learning models for face detection. We will use a deep learning based CNN (Convolutional Neural Network) face detector to detect faces in images and videos.

So, what are we going to cover in this article?

- What were some of the drawbacks of Dlib HOG and Linear SVM detector?

- A brief about the deep learning face detector that we will use here.

- Going through the directory structure and input files.

- Detecting faces in images and videos using the CNN detector.

- What are some of the benefits and drawbacks of the deep learning based face detector?

Drawbacks of the Dlib HOG and Linear SVM Face Detector

While using Dlib’s HOG + Linear SVM model for face detection, we found some issues. Although the detector seemed to perform on faces which were bigger and front facing. It suffered when the orientation of the face changed, had any coverings, or if the face appeared very small in the image/video frame.

Moreover, it was using the traditional machine learning technique for face detection. So, it was not possible to benefit from current hardware like GPUs which almost all deep learning models can benefit from.

The above issues might seem trivial in most cases but can become serious problems when trying to build a really good face detection application.

The Dlib CNN Face Detector

In this post, we will move over from the traditional machine learning based face detector. We will use a CNN model for face detection. It is a pre-trained model that we will load while executing our Python scripts.

One of the major benefits of this detector is that it can use the computational power of our GPUs, if one is available. This can make the detection pipeline a bit faster and take off the load from the CPU which can then focus on other tasks. If a GPU is not present, then by default, the model will be using the CPU for all the processing necessary.

Now, for this, we also need a pre-trained face detection model. You can find it in the official Dlib models GitHub repository of Davis King.

Note: If you have already downloaded the source code files for this post, then you skip downloading the model manually. The source folder also contains all the input files that we will need for this post, including the CNN model.

It is the mmod_human_face_detector.dat.bz2 file. You can also directly download the model by clicking on the following link:

You can then extract the .bz2 file which will give you the actual model, that is mmod_human_face_detector.dat.

To carry out face detection with Dlib using the CNN model, we need to call a specific function. Dlib provides the cnn_face_detection_model_v1() function to load the initialize the CNN based detector. We need to pass the path to the mmod_human_face_detector.dat file as the argument to this function.

Directory Structure and Input Files

Let’s take a look at the directory structure for this project.

├── input │ ├── image_1.jpg │ ├── image_2.jpg │ └── video_1.mp4 ├── outputs │ ├── image_1_u1.jpg │ ... ├── face_det_image.py ├── face_det_video.py ├── mmod_human_face_detector.dat ├── mmod_human_face_detector.dat.bz2 ├── process_dlib_boxes.py

- The

inputfolder contains the images and videos in which we will detect the faces using the Dlib CNN face detector. - The

outputsfolder will contain all the results of face detection after we run the Python scripts. - Then we have three Python files for which we will write the code a bit further on into the post.

- And finally, we have the

mmod_human_face_detector.datfile. This is the CNN model that detects faces.

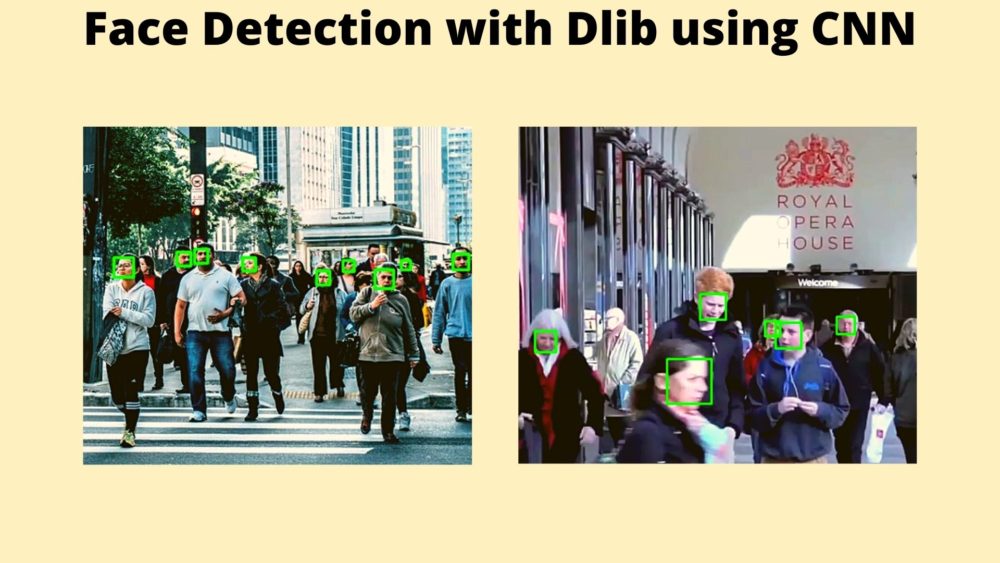

Just to get an idea, the following figure shows the two images from the input folder in which we will detect faces.

We are done with all the preliminary stuff here.

Face Detection with Dlib using CNN

The coding part of this post and analyzing the results will be really interesting. There are three Python files in which we need to write the code. We will start with a simple utility function.

Utility Function to Process Dlib Bounding Boxes

This is going to be a very simple function what will provide us with the bounding box coordinates that Dlib outputs when it detects a face.

We discussed the bounding box output format of Dlib in some detail in the previous post. If you are directly reading this, then please take a look at the previous post.

We will write this code in the process_dlib_boxes.py file.

def process_boxes(box):

xmin = box.rect.left()

ymin = box.rect.top()

xmax = box.rect.right()

ymax = box.rect.bottom()

return [int(xmin), int(ymin), int(xmax), int(ymax)]

The process_boxes() function accepts one bounding box as the input parameter after the Dlib face detection happens. It then extracts top left and bottom right coordinates. Finally, it returns the coordinates in a list format.

Face Detection with Dlib using CNN in Images

In this section, we will write the code to detect faces in images. In a later part of the post, we will also detect faces in videos.

This code will go into the face_det_image.py Python script. Let’s start with all the import statements and constructing the argument parser.

import dlib

import argparse

import cv2

import time

import process_dlib_boxes

# contruct the argument parser

parser = argparse.ArgumentParser()

parser.add_argument('-i', '--input', default='input/image_1.jpg',

help='path to the input image')

parser.add_argument('-u', '--upsample', default=None, type=int,

help='factor by which to upsample the image, default None, ' + \

'pass 1, 2, 3, ...')

args = vars(parser.parse_args())

- At line 1, we are import

dlib. - We need the cv2 module to read and process images.

- At line 6, we are also importing our own module, which is

process_dlib_boxes.

For, the argument parser, we have two flags.

--inputaccepts the path to the input image.--upsamplewill accept an integer that indicates by how much to upsample the image (make it bigger). It will just make everything bigger in the image that can help detect even very smaller and far-off faces. Note that by default the--upsamplevalue will beNone.

Read the Image and Load the CNN Detector

The next block of code reads the image, converts it into RGB format, and initializes the Dlib CNN face detector.

# read the image and convert to RGB color format

image = cv2.imread(args['input'])

image_rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# path for saving the result image

save_name = f"outputs/{args['input'].split('/')[-1].split('.')[0]}_u{args['upsample']}.jpg"

# initilaize the Dlib face detector

detector = dlib.cnn_face_detection_model_v1('mmod_human_face_detector.dat')

At line 20, we are also initializing a save_name variable. Using this name we will save the resulting image to disk. We are appending the upsample value with this string so that subsequent runs with different upsample values do not overwrite the previous results. Then at line 23, we initialize the detector variable by loading the face detection model.

Detect the Faces

The following block contains the code to detect faces in images.

if args['upsample'] == None:

start = time.time()

detected_boxes = detector(image_rgb)

end = time.time()

elif args['upsample'] > 0:

start = time.time()

detected_boxes = detector(image_rgb, int(args['upsample']))

end = time.time()

- The first case is when the

--upsamplevalue isNone(default value). In that case we just pass the RGB image to thedetectorwithout any other argument. - If the

--upsamplevalue is greater than 0, then we pass that value along with the RGB image as the argument.

If you have an Nvidia GPU in your system, then the Dlib’s CNN detector will automatically use it to speed up the detection pipeline. Else, it will use your CPU for all the processing. In almost all cases, the GPU processing time will be much less than the CPU.

There is also another thing to take care of here. If we provide a very high value for the --upsample flag, then the detector will need a huge amount of GPU memory and might crash due to low memory availability. We will see one such case while running the script.

Draw the Bounding Boxes and Show the Results

After the detection, we need to draw the bounding boxes around the detected faces.

# process the detection boxes and draw them around faces

for box in detected_boxes:

res_box = process_dlib_boxes.process_boxes(box)

cv2.rectangle(image, (res_box[0], res_box[1]),

(res_box[2], res_box[3]), (0, 255, 0),

2)

cv2.imshow('Result', image)

cv2.waitKey(0)

cv2.imwrite(save_name, image)

print(f"Total faces detected: {len(detected_boxes)}")

print(f"Total time taken: {end-start:.3f} seconds.")

print(f"FPS: {1/(end-start):.3f}")

Starting from line 33, for each box in detected_boxes:

- We extract the top-left and bottom-right coordinates of the bounding box by calling the

process_boxes()function. - Then we are drawing the rectangle around detected face.

Finally, we are showing the result in a new OpenCV window, saving the result to disk. Along with that we are also printing the total time taken, and total number of faces detected on the terminal window.

With this, we have completed all the code needed for face detection in images.

Execute face_det_image.py to Detect Faces in Images

Let’s execute the face_det_image.py script and see how well the CNN face detector performs.

We have two images in the input folder. Let’s start with the first image without any upsampling.

python face_det_image.py -i input/image_1.jpg

You should see output similar to the following in the terminal window.

Total faces detected: 0 Total time taken: 0.801 seconds. FPS: 1.248

Astonishingly, no faces were detected. There is no point in visualizing the output image in this case.

Will upsampling the image help? Let’s try with upsample value of 1.

python face_det_image.py -i input/image_1.jpg -u 1

Total faces detected: 5 Total time taken: 1.321 seconds. FPS: 0.757

Interestingly, all 5 faces were detected. But the total time taken for the computation also increased. The following figure shows the output.

Clearly, the CNN model was able to detect all the 5 faces.

Now, let’s throw a bit challenging image at the detector, that is the second image.

python face_det_image.py -i input/image_2.jpg

Total faces detected: 0 Total time taken: 0.878 seconds. FPS: 1.139

Again no faces detected without upsampling.

python face_det_image.py -i input/image_2.jpg -u 1

Total faces detected: 0 Total time taken: 1.287 seconds. FPS: 0.777

Even with upsampling value of 1, the CNN model as not able to detect any faces. Looks like the faces are a bit too small in the image.

python face_det_image.py -i input/image_2.jpg -u 2

Total faces detected: 5 Total time taken: 3.247 seconds. FPS: 0.308

Okay, with an upsampling value of 2, the model is able to detect 5 faces. But it took more than 3 seconds to process the entire image! Not at all real-time.

What about upsampling the image even more.

python face_det_image.py -i input/image_2.jpg -u 3

Total faces detected: 11 Total time taken: 11.902 seconds. FPS: 0.084

This time, the model was able to detect 11 faces but tool almost 12 seconds to process the image.

We can see in figure 4 that still all the faces have not been detected in the image. Let’s try detecting those by upsampling the image even more.

python face_det_image.py -i input/image_2.jpg -u 4

And this is the output.

RuntimeError: Error while calling cudnnFindConvolutionForwardAlgorithm( context(), descriptor(data), (const cudnnFilterDescriptor_t)filter_handle, (const cudnnConvolutionDescriptor_t)conv_handle, descriptor(dest_desc), num_possible_algorithms, &num_algorithms, perf_results.data()) in file /tmp/pip-install-mtngfqwt/dlib_e6e37bb256834042b62a451c24b2cebc/dlib/cuda/cudnn_dlibapi.cpp:812. code: 2, reason: CUDA Resources could not be allocated.

The CUDA device ran out of memory and resources could not be allocated. This was run on a GTX 1060 GPU with 6 GB of video memory. If you have a better GPU with higher memory, then you may have luck running with an upsampling value of 4 or even more. For now, we have to do with upsampling value of 3 only.

Detecting Faces with Dlib using CNN in Videos

In the above section, we covered face detection in images with Dlib using CNN. Now, it’s time that we dive into face detection in videos and see how the CNN model performs.

We will write the code in face_det_video.py file.

Most of the steps are going to be similar to as were in face detection in images. Starting with importing the required modules and constructing the argument parser.

import dlib

import argparse

import cv2

import time

import process_dlib_boxes

# contruct the argument parser

parser = argparse.ArgumentParser()

parser.add_argument('-i', '--input', default='input/video_1.mp4',

help='path to the input image')

parser.add_argument('-u', '--upsample', default=None, type=int,

help='factor by which to upsample the image, default None, ' + \

'pass 1, 2, 3, ...')

args = vars(parser.parse_args())

The import statements are exactly the same as they were in the case of face detection in images. For the command line arguments part, the only difference is that --input will accept the path to a video file instead of an image.

Initialize the CNN Detector and Read the Video File

# initilaize the Dlib CNN face detector

detector = dlib.cnn_face_detection_model_v1('mmod_human_face_detector.dat')

# capture the video

cap = cv2.VideoCapture(args['input'])

if (cap.isOpened() == False):

print('Error opening video file. Please check file path...')

# get the frame width and height

frame_width = int(cap.get(3))

frame_height = int(cap.get(4))

# file name for saving the resulting video

save_name = f"{args['input'].split('/')[-1].split('.')[0]}_u{args['upsample']}"

# define codec and create VideoWriter object

out = cv2.VideoWriter(f"outputs/{save_name}.mp4",

cv2.VideoWriter_fourcc(*'mp4v'), 30,

(frame_width, frame_height))

# for counting the total number of frames

frame_count = 0

# to keep track of the total frames per second

total_fps = 0

- In line 17, we are loading the CNN face detector.

- Then we are reading the video file at line 20.

- Lines 26 and 27 capture the video frames’ width and height. We will need these to properly initialize the

VideoWriter()object for saving the resulting frames. - After that, we initialize the

save_namevariable and create theVideoWriter()object to save the resulting video frames with the detected faces in them. - Line 37 initializes the

frame_countvariable. This is to keep track of the total number of frames that we have iterated through in the video. And line 39 initializes thetotal_fpswhich will keep track of the cumulative FPS (Frames Per Second) for each of the iterated frames.

Iterate Through the Video Frames and Detect Faces

We will simply loop over all the video frames using a while loop. And for each frame, we will detect the faces present in it. Let’s take a look at the following block of code.

while(cap.isOpened()):

# capture each frame of the video

ret, frame = cap.read()

if ret:

image_rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

# detect the faces in frame

if args['upsample'] == None:

start = time.time()

detected_boxes = detector(image_rgb)

end = time.time()

elif args['upsample'] > 0:

start = time.time()

detected_boxes = detector(image_rgb, int(args['upsample']))

end = time.time()

# get the current fps

fps = 1 / (end - start)

# add `fps` to `total_fps`

total_fps += fps

# increment frame count

frame_count += 1

# draw the boxes on the original frame

for box in detected_boxes:

res_box = process_dlib_boxes.process_boxes(box)

cv2.rectangle(frame, (res_box[0], res_box[1]),

(res_box[2], res_box[3]), (0, 255, 0),

2)

# put the fps text on the current frame

cv2.putText(frame, f"{fps:.3f} FPS", (15, 30), cv2.FONT_HERSHEY_SIMPLEX,

1, (0, 255, 0), 2)

cv2.imshow('Result', frame)

out.write(frame)

if cv2.waitKey(1) & 0xFF == ord('q'):

break

else:

break

First, we convert the image to RGB color format for each of the current frames and detect the faces according to the upsampling value (lines 44 to 54). Then we calculate the fps for the current frame’s detection, add it to total_fps, and increment the frame_count.

Starting from line 64, we draw the bounding boxes around the detected faces. Line 71 puts the FPS text on the current frame. Then we show the result on the screen and write the frame to disk as well.

The final few steps are releasing the VideoCapture() object, destroying all OpenCV windows, and printing the average FPS on the terminal window.

# release VideoCapture()

cap.release()

# close all frames and video windows

cv2.destroyAllWindows()

# calculate and print the average FPS

avg_fps = total_fps / frame_count

print(f"Average FPS: {avg_fps:.3f}")

With the above block, we complete the code for face detection in videos using Dlib’s CNN model.

Execute face_det_video.py to Detect Faces in Videos

We have all the code ready with us. Now, we just need to execute the script to detect faces in videos.

First, let’s try without any upsampling.

python face_det_video.py -i input/video_1.mp4

Average FPS: 52.891

We get almost 53 FPS on average without providing any upsampling value for the detection pipeline. The following clip shows the output.

Only two faces were detected in the whole video and that even when they were very close to the camera.

What about an upsampling value of 1?

python face_det_video.py -i input/video_1.mp4 -u 1

Average FPS: 10.914

This time, the average FPS dropped by more than 42 points. That is a huge drop. Do we get the results in terms of face detection in exchange for this huge drop in FPS?

This time the results are better. The two faces which were detected only when they were closer to the camera are now being detected when they are far off as well. Also, a few more faces are being detect as well.

What about upsampling with a value of 3?

python face_det_video.py -i input/video_1.mp4 -u 2

Average FPS: 2.634

We get just 2.634 FPS on average and that too on a GPU. Be careful while running with a very high upsampling value on a CPU, which might freeze the script/program.

These are the best results till now. A lot of faces are being detected even at the back which are small. But it is not at all real-time. We are getting just 2.6 FPS.

A Few Takeaways

- Using Dlib’s CNN face detector, we are able to run the detection pipeline on the GPU which reduces the load on the CPU.

- Faces that are very far off, or occluded, or even have different orientations are being detected. This was not the case with Dlib’s HOG and Linear SVM face detector.

- But using a very high upsampling value still possesses the problem of increasing the detection pipeline time by a huge margin.

Summary and Conclusion

In this article, we learned about face detection with Dlib using the CNN face detector. We saw how it improves over the traditional HOG + Linear SVM detector. We also covered some of the drawbacks like low FPS when upsampling the image. I hope that you learned something new from this post.

If you have any doubts, thoughts, or suggestions, then please leave them in the comment section. I will surely address them

You can contact me using the Contact section. You can also find me on LinkedIn, and X.