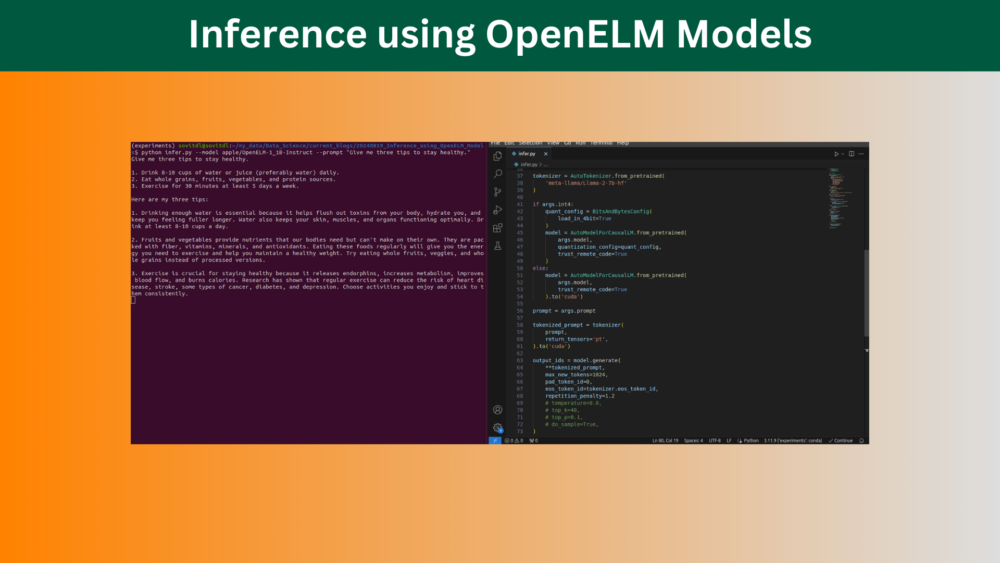

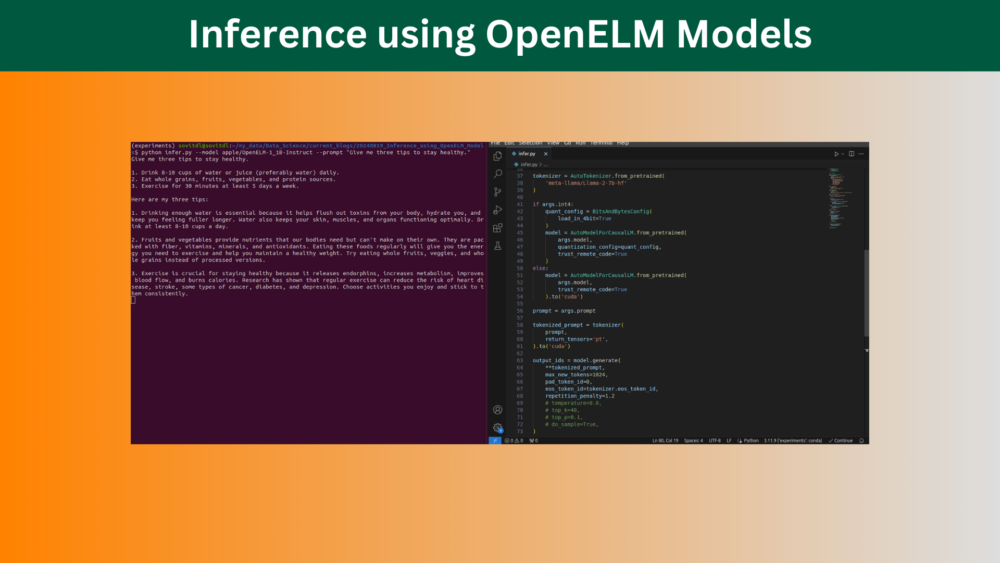

Inference using OpenELM Models

In this article, we run inference using the base and instruction-tuned OpenELM models with the Hugging Face library. ...
