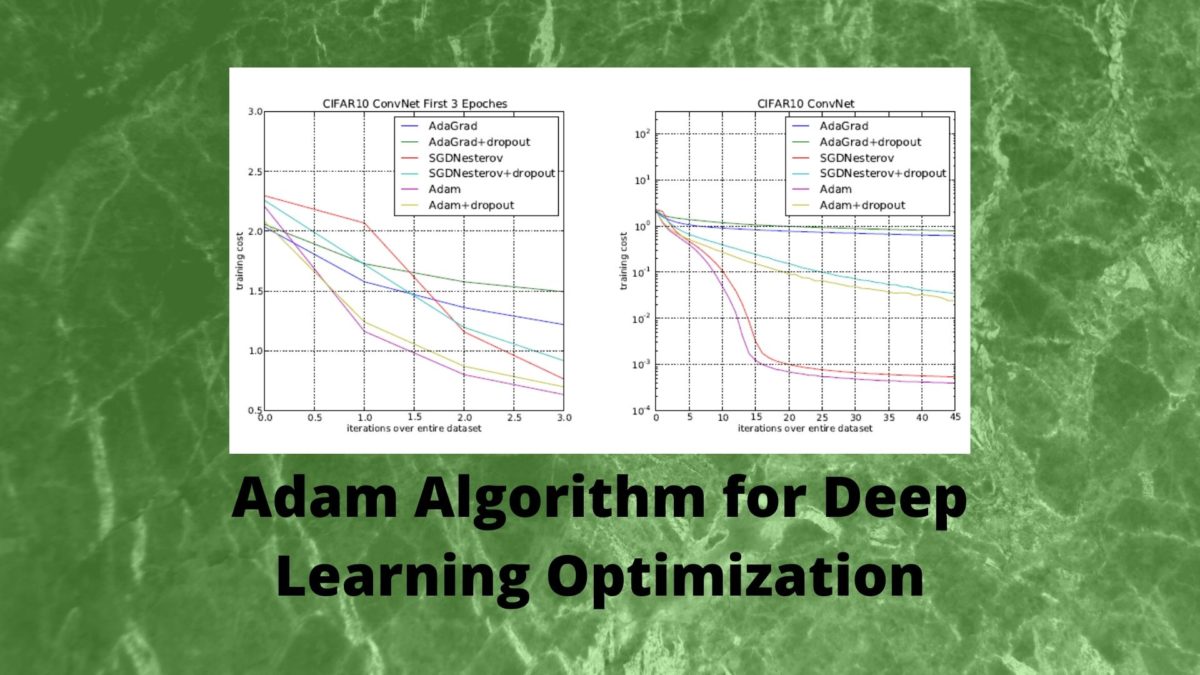

In this tutorial, you will learn how to set up a small experiment that compares the Adam and SGD (Stochastic Gradient Descent) optimizers for deep learning optimization. Specifically, you will learn how to use Adam for deep learning optimization. The choice of optimization algorithm plays a crucial role in the success of a deep learning project. Stochastic […]
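This excerpt doesn't show the tutorial's actual experiment, so here is a rough sketch of what such an Adam-versus-SGD comparison involves. The pure-Python snippet fits a hypothetical linear model `y = 2x + 1` on synthetic noisy data, once with minibatch SGD and once with Adam. It uses Adam's bias-corrected first and second moment estimates with the commonly used defaults `beta1 = 0.9`, `beta2 = 0.999`, `eps = 1e-8`. The dataset, learning rates, step counts, and helper names (`make_data`, `train_sgd`, `train_adam`) are assumptions made for illustration. A real deep learning experiment would more likely rely on a framework's built-in optimizers, for example PyTorch's `torch.optim.SGD` and `torch.optim.Adam`.

```python
import math
import random

# Hypothetical toy dataset (an assumption, not from the tutorial): y = 2x + 1 plus Gaussian noise.
def make_data(n=200, seed=0):
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys

def mse(params, xs, ys):
    """Mean squared error of the linear model w * x + b."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grad(params, xs, ys):
    """Gradient of the mean squared error with respect to (w, b)."""
    w, b = params
    n = len(xs)
    gw = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    gb = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return [gw, gb]

def minibatches(xs, ys, batch_size, steps, seed):
    """Yield `steps` random minibatches; the same seed gives the same batch order."""
    rng = random.Random(seed)
    idx = list(range(len(xs)))
    for _ in range(steps):
        batch = rng.sample(idx, batch_size)
        yield [xs[i] for i in batch], [ys[i] for i in batch]

def train_sgd(xs, ys, lr=0.1, steps=1000, batch_size=10, seed=1):
    """Minibatch SGD: params <- params - lr * gradient."""
    params = [0.0, 0.0]
    for bx, by in minibatches(xs, ys, batch_size, steps, seed):
        g = grad(params, bx, by)
        params = [p - lr * gi for p, gi in zip(params, g)]
    return params

def train_adam(xs, ys, lr=0.05, beta1=0.9, beta2=0.999, eps=1e-8,
               steps=1000, batch_size=10, seed=1):
    """Adam: per-parameter steps scaled by running gradient statistics."""
    params = [0.0, 0.0]
    m = [0.0, 0.0]  # running mean of gradients (first moment)
    v = [0.0, 0.0]  # running mean of squared gradients (second moment)
    batches = minibatches(xs, ys, batch_size, steps, seed)
    for t, (bx, by) in enumerate(batches, start=1):
        g = grad(params, bx, by)
        m = [beta1 * mi + (1 - beta1) * gi for mi, gi in zip(m, g)]
        v = [beta2 * vi + (1 - beta2) * gi * gi for vi, gi in zip(v, g)]
        # Bias correction: m and v start at zero, so early estimates are scaled up.
        m_hat = [mi / (1 - beta1 ** t) for mi in m]
        v_hat = [vi / (1 - beta2 ** t) for vi in v]
        params = [p - lr * mh / (math.sqrt(vh) + eps)
                  for p, mh, vh in zip(params, m_hat, v_hat)]
    return params

if __name__ == "__main__":
    xs, ys = make_data()
    for name, train in [("SGD", train_sgd), ("Adam", train_adam)]:
        w, b = train(xs, ys)
        print(f"{name}: w={w:.3f}, b={b:.3f}, MSE={mse([w, b], xs, ys):.4f}")
```

Running the script prints the fitted parameters and the full-dataset MSE for each optimizer. Both runs see the same data and the same minibatch order (same `seed`), so any difference between them comes from the update rules and their hyperparameters. On a problem this small and well-conditioned, both should end up close to `w = 2`, `b = 1`. Differences between optimizers tend to matter more on real networks.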

Adam Optimizer for Deep Learning Optimization