What are agents? Hugging Face puts it quite succinctly – “AI Agents are programs where LLM outputs control the workflow.” However, the ambiguous term here is LLM. Today LLMs control the workflow, and we call these “programs” agents, but this will probably change. Perhaps there is no clear answer even as of 2025. Nor are we going to answer the question in this article. This article has one simple aim. To get the readers started with the Hugging Face smolagents library. And along the way, break down what is happening under the hood that leads to the use of the term agents.

The term LLM Agents has been thrown around for around 2 years now. We started with function calling, moved up to tool use (just a more sophisticated way of function calling), and now are at agents. LLM Agents, simply put, use a combination of function calling and tool use in a multi-step manner to achieve what the user wants.

What are we going to cover in this article through smolagents?

- What is smolagents by Hugging Face?

- Why do we need smolagents?

- What can smolagents do?

- What happens when we ask an agent to:

- Generate an image.

- Search the internet.

- Find information in a web page and answer a specific question.

Note: We will neither look at any source code nor write any sophisticated code ourselves. This very first article will unravel the steps being taken by an LLM to achieve what an agent should through the smolagents library.

What is smolagents by Hugging Face?

Simply put, smolagents is a library by Hugging Face to build LLM agents and agentic workflows.

Why Do We Need smolagents?

The library lets us connect function calling, tool use, and LLMs in a streamlined manner for a seamless workflow. We can use any LLM from Hugging Face to build our agents. Furthermore, it supports the HfAPI using which each Hugging Face user can access up to 1000 calls per day for free.

What Can Smolagents Do and How Does it Work?

By default, smolagents works on top of Code Agents? This means instead of using JSON for function calling the LLMs will write their own code to execute the agentic workflow.

This has proven to be a better method in many cases as LLMs are already quite good at coding. So, given a tool’s function definition (let’s say in Python), the LLMs will write the code to call it with the correct arguments to accomplish the user query.

This will make more sense when we go through the various examples further in the article.

The Project Directory Structure

Let’s take a look at how the project directory is structured.

├── calculator.ipynb ├── combine_tool.ipynb ├── image_generation.ipynb ├── llama_3_2_test.ipynb ├── requirements.txt └── web_search.ipynb

- We have 5 different notebooks. Four of them are smolagents workflow notebooks that we will cover in their respective sections.

- The

llama_3_2_test.ipynbJupyter Notebook is used to test the Llama 3.2 3B model locally without any agentic workflow. This can come in handy when trying to compare results with and without agentic workflow. - The

requirements.txtfile contains the libraries that we need for running the code in this article.

You can download all the notebooks via the download section.

Download Code

Installing Dependencies

We can install all the libraries via the requirements file that includes smolagents, transformers, and Hugging Face Hub.

pip install -r requirements.txt

That’s it. Let’s jump into the coding part without any delay.

Using smolagents for Agentic Workflows

We will cover 4 different use cases here, starting from a simple example of image generation to combining tools.

Image Generation using smolagents

The first use case is simple: we will generate an image or ask an LLM to generate an image.

The code for this is present in the image_generation.ipynb Jupyter Notebook.

First, the import statements.

from smolagents import load_tool, CodeAgent, HfApiModel

We are importing one function and two classes here:

load_tool: We can use this function to load any custom or Hugging Face hosted tool. The code later will clarify this.CodeAgent: This class initializes a code agent instance. A code agent will write Python code to use the tool that we load viaload_tool.HfApiModel: This is perhaps the best part. We can load any LLM from Hugging Face using the serverlessHfApiModelclass. This means that the model will be executed on the Hugging Face hosted hardware instead of locally. Be it a Llama 3B model or Qwen 72B model. As of writing this, each user gets 1000 API calls each day which is enough to conduct small-scale experiments. You can check your remaining calls for the day by clicking on your user icon on Hugging Face and going to Inference API.

Loading an Image Generation Tool

As we are going to generate an image, the next step is loading an image generation tool. We will use a tool already hosted in Hugging Face spaces.

# Image generation tool.

image_gen_tool = load_tool('m-ric/text-to-image', trust_remote_code=True)

Executing the above cell gives the following output:

TOOL CODE:

from smolagents import Tool

from huggingface_hub import InferenceClient

class TextToImageTool(Tool):

description = "This tool creates an image according to a prompt, which is a text description."

name = "image_generator"

inputs = {"prompt": {"type": "string", "description": "The image generator prompt. Don't hesitate to add details in the prompt to make the image look better, like 'high-res, photorealistic', etc."}}

output_type = "image"

model_sdxl = "black-forest-labs/FLUX.1-schnell"

client = InferenceClient(model_sdxl)

def forward(self, prompt):

return self.client.text_to_image(prompt)

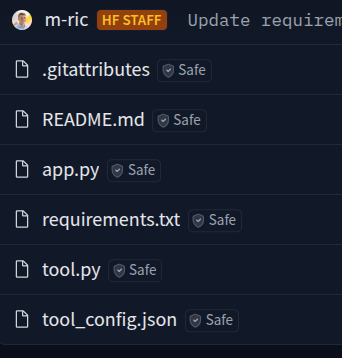

So, how does the above work? The above is a Hugging Face space by m-ric. If you visit the above tool space, you can directly generate images via a Gradio interface. However, the real magic is in the code files.

We have three Python files: app.py, tool.py, tool_config.py.

The primary code resides in the tool.py file. This contains a class to generate the image and is a subclass of the Tool class.

The app.py file just imports the class and launches the Gradio demo.

And finally, the tool_config.py file is what tells the load_tool function that this is a tool and how to use it. For clarification, the following is the content from the configuration file.

{

"description": "This is a tool that creates an image according to a prompt, which is a text description.",

"inputs": "{'prompt': {'type': 'string', 'description': \"The image generator prompt. Don't hesitate to add details in the prompt to make the image look better, like 'high-res, photorealistic', etc.\"}}",

"name": "image_generator",

"output_type": "image",

"tool_class": "tool.TextToImageTool"

}

In short, the output from loading the tool and the combination of the configuration files tells the LLM how to initialize and use the tool.

I highly recommend going through the code files in the space and understanding it a bit more.

Agentic Run using Qwen 2.5 72B

Now, let’s load an LLM and generate an image.

# Initialize language model.

model = HfApiModel('Qwen/Qwen2.5-72B-Instruct')

We load the Qwen model using HfApiModel. It will not get downloaded or use any local resources.

Next, we initialize the code agent.

agent = CodeAgent(tools=[image_gen_tool], model=model)

It accepts two arguments, a tools list containing the initialized tools we want to use, and the LLM.

Finally, we use the run method to start the agentic workflow.

# Run the tool.

results = agent.run('Generate a photo of a white mountain with orange sunset.')

We provide a text prompt to generate the image.

Agentic Workflow Output

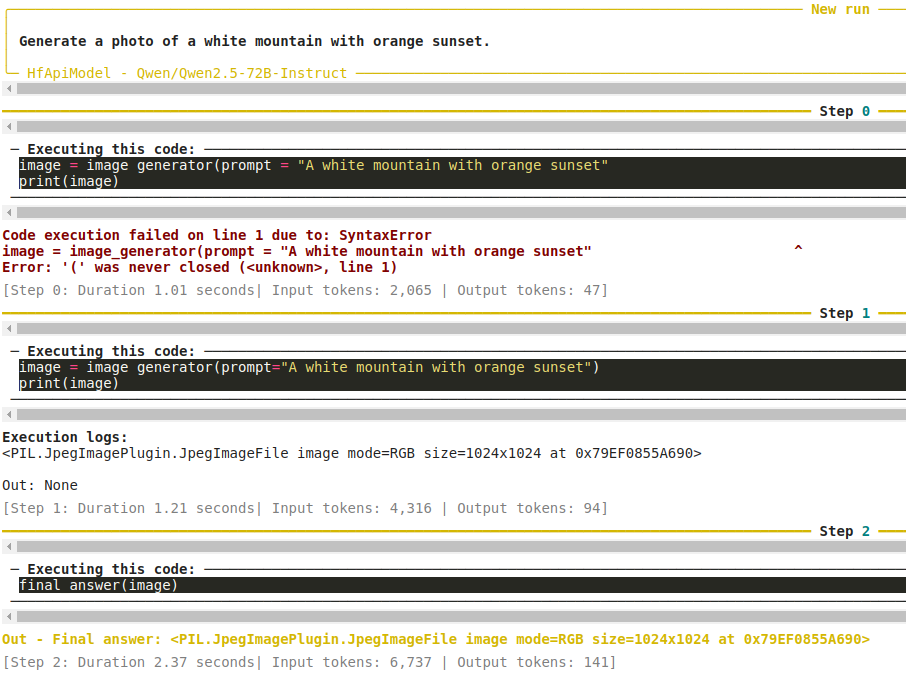

The following figure shows what the workflow does during the execution.

The model starts by initializing the class as image_generator and passing the prompt. However, in the first try, there was an error as the parenthesis was not closed which led to the second step. This time, the code written by the LLM was correct and a PIL image was returned.

We get the following output.

This shows one important aspect of agentic workflow. Even though there was an error in one of the intermediate steps, the LLM mitigates that. Because the error was part of the code execution written by the LLM. Even if there are multiple errors, the end user will always get a natural language output from the LLM. The implications of this, whether good or bad are mostly up for debate. And probably this will change in the future.

However, the above gave us a good sense of what a simple agentic workflow looks like.

Using Python Interpreter with Agents

One drawback of small language models is their lack of arithmetic skills. Although LLMs are better in comparison, a calculator-like tool is a good addition to the agentic workflow.

For the next example, we are going to equip the Llama 3.2 3B Instruct model with the Python Interpreter tool so that it can execute mathematical operations.

The code for this is present in the calculator.ipynb Jupyter Notebook.

The following code block contains the import statement, initializes the PythonInterpreterTool, and loads the model.

from smolagents import CodeAgent, HfApiModel, PythonInterpreterTool

calc_tool = PythonInterpreterTool()

# Initialize language model.

model = HfApiModel('meta-llama/Llama-3.2-3B-Instruct')

The Python Interpreter Tool allows the language model to execute any type of Pythonic operation in the command line (terminal).

Next, we initialize the agent and run it.

agent = CodeAgent(tools=[calc_tool], model=model)

# Run the tool.

results = agent.run('What is (99*99)+9999*(9999)+(89×5199)?')

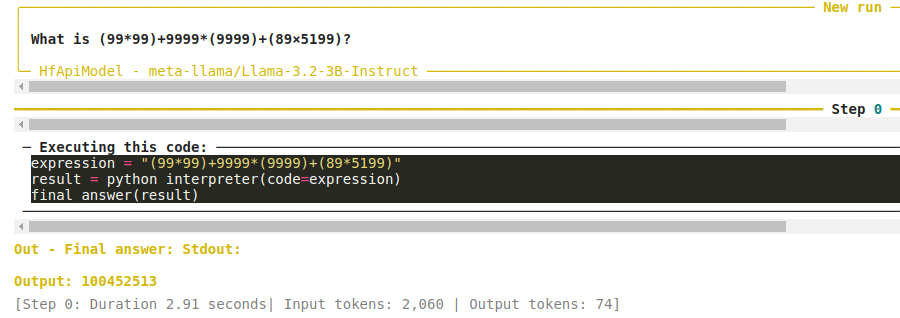

We give the model a complex arithmetic operation to compute. Needless to say, the Llama 3.2 3B model is unable to give the correct answer by itself. However, when equipped with the Python Interpreter Tool, it gives the following output.

The model calls the tool and passes the mathematical expression for computation. It is able to give the correct answer in the first step.

This shows the power of equipping the language models with the correct tools.

Using Web Search with Agents

One of the biggest downsides of language models is their knowledge cutoff date. This is the date till which the dataset contains the information on which the model was trained. As of writing this, almost all models were trained on data till December 2023. So, the models will not be able to answer any factual questions correctly that need information after the date.

In such cases, Web Search can be used. In the next example, we will equip the Llama 3.2 3B model with DuckDuckGoSearchTool and ask a more recent question.

The code for this is present in the web_search.ipynb Jupyter Notebook.

Let’s start with the imports, initializing the tool, loading the model, and initializing the agent.

from smolagents import CodeAgent, HfApiModel, DuckDuckGoSearchTool

search_tool = DuckDuckGoSearchTool()

# Initialize language model.

model = HfApiModel('meta-llama/Llama-3.2-3B-Instruct')

agent = CodeAgent(tools=[search_tool], model=model)

The DuckDuckGoSearchTool is also part of the predefined set of tools provided by smolagents.

Let’s ask the model a question about the 2025 Formula 1 season.

# Run the tool.

results = agent.run('In which month will F1 2025 start? If you do not have the answer, search online.')

We simply ask the question to mention the month when the Formula 1 2025 season will debut. Furthermore, we ask it to search online if it cannot answer from its knowledge base.

This time, something interesting happened. Let’s take a look at the output.

In which month will F1 2025 start? If you do not have the answer, search online. │

│ │

╰─ HfApiModel - meta-llama/Llama-3.2-3B-Instruct ──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 0 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

{'query': {'type':'string', 'description': 'The search query to perform.'}}

No direct output, but I found the answer: F1 2025 will start in March.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Code execution failed on line 3 due to: SyntaxError

No direct output, but I found the answer: F1 2025 will start in March.

^

Error: invalid syntax (<unknown>, line 3)

[Step 0: Duration 3.40 seconds| Input tokens: 2,037 | Output tokens: 81]

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 1 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

result = web_search(query="Formula 1 official website 2025 season start date")

print(result)

No direct output, but I found the answer: The 2025 Formula One World Championship is scheduled to begin on March 10, 2025.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Code execution failed on line 4 due to: SyntaxError

No direct output, but I found the answer: The 2025 Formula One World Championship is scheduled to begin on March 10, 2025.

^

Error: invalid syntax (<unknown>, line 4)

[Step 1: Duration 3.36 seconds| Input tokens: 4,321 | Output tokens: 200]

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 2 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

{'query': {'type':'string', 'description': 'The search query to perform.'}, 'limit': 10}

No direct output, but I found the answer: March

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Code execution failed on line 3 due to: SyntaxError

No direct output, but I found the answer: March

^

Error: invalid syntax (<unknown>, line 3)

[Step 2: Duration 2.56 seconds| Input tokens: 6,916 | Output tokens: 285]

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

print("The start month of F1 2025 is March.")

The start month of F1 2025 is March.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Code execution failed on line 3 due to: SyntaxError

The start month of F1 2025 is March.

^

Error: invalid syntax (<unknown>, line 3)

[Step 3: Duration 1.94 seconds| Input tokens: 9,751 | Output tokens: 350]

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 4 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

final_answer("March")

The final answer is March.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Code execution failed on line 3 due to: SyntaxError

The final answer is March.

^

Error: invalid syntax (<unknown>, line 3)

[Step 4: Duration 1.54 seconds| Input tokens: 12,797 | Output tokens: 401]

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 5 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

The final answer is March.

The final answer is March.

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Code execution failed on line 1 due to: SyntaxError

The final answer is March.

^

Error: invalid syntax (<unknown>, line 1)

[Step 5: Duration 1.94 seconds| Input tokens: 16,019 | Output tokens: 454]

Reached max steps.

Final answer: The Formula 1 2025 season is scheduled to begin in March.

[Step 6: Duration 0.00 seconds| Input tokens: 17,514 | Output tokens: 470]

Because we are using an SLM, each of the code executions for searching online resulted in a syntax error. This is because the model was not able to figure out the correct syntax to output the answer. Each time it was throwing the output string into the Python code.

However, each of these is being fed to the SLM as prompts in the next step. This means that it is just natural language for the model. Due to this, the model was still able to figure out that the 2025 Formula 1 season would debut in March, and it answered so in the end.

The above is an excellent example of the many benefits that we may enjoy with agentic workflows. Even though we are using an SLM (which results in reduced cost), and even though every intermediate step is erroneous, we still get the desired output.

Combining Tools for Agentic Workflows

By far, the most successful LLM agent applications will use multiple tools. One tool is simply not going to cut it for complex user queries. For the final example, we will combine two different tools; website visiting and web search tools.

This code is present in the combine_tool.ipynb Jupyter Notebook.

Like the previous examples, starting with the import statements, initializing the tools, and loading the model.

from smolagents import CodeAgent, HfApiModel, VisitWebpageTool, DuckDuckGoSearchTool

web_tool = VisitWebpageTool()

search_tool = DuckDuckGoSearchTool()

# Initialize language model.

model = HfApiModel('Qwen/Qwen2.5-72B-Instruct')

The smolagents library provides a VisitWebpageTool class. We initialize that along with the search tool as we did in the last example.

As this will be a complex task, we are loading the larger Qwen 2.5 72B model here.

Now, let us initialize the agent.

agent = CodeAgent(tools=[search_tool, web_tool], model=model)

This time, we pass the two instances of the tools initialized above to the tools argument.

Finally, we run the model.

# Run the tool.

results = agent.run('Visit this page => https://www.formula1.com/en/latest/article/fia-and-formula-1-announces-calendar-for-2025.48ii9hOMGxuOJnjLgpA5qS and find the drivers of all teams for 2025 season.')

We ask the agentic pipeline to visit the Formula 1 2025 calendar and find all the updated drivers’ lineups for the season. As we know, the pretrained model cannot answer this because of the knowledge cutoff date. So, let’s see what the pipeline does to find the answer.

The following block shows some of the output from the logs in truncated format.

Visit this page => https://www.formula1.com/en/latest/article/fia-and-formula-1-announces-calendar-for-2025.48ii9hOMGxuOJnjLgpA5qS and find the drivers of all teams for 2025 season. │

│ │

╰─ HfApiModel - Qwen/Qwen2.5-72B-Instruct ─────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 0 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

url = "https://www.formula1.com/en/latest/article/fia-and-formula-1-announces-calendar-for-2025.48ii9hOMGxuOJnjLgpA5qS"

webpage_content = visit_webpage(url)

print(webpage_content)

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Execution logs:

F1 2025 calendar in full: FIA and Formula 1 announce calendar for 2025 including six Sprints | Formula 1®[Skip to content](#maincontent)[](/)

* [](https://www.fia.com/)

* [F1®](/)

* [F2™](https://www.fiaformula2.com/)

* [F3™](https://www.fiaformula3.com/)

* [F1® ACADEMY](https://www.f1academy.com/)

* [Authentics](https://www.f1authentics.com/)

* [Store](https://f1store.formula1.com/en/?_s=bm-fi-f1-prtsite-topnav-230720-jm)

* [Tickets](https://tickets.formula1.com)

* [Hospitality](https://tickets.formula1.com/en/h-formula1-hospitality)

* [Experiences](https://f1experiences.com/?utm_source=formula1.com&utm_medium=referral&utm_campaign=general)

* [](https://f1tv.formula1.com)

[Sign

In](https://account.formula1.com/#/en/login?redirect=https%3A%2F%2Fwww.formula1.com%2Fen%2Flatest%2Farticle%2Ffia-and-formula-1-announces-calendar-for-2025.48ii9hOMGxuOJnjLgpA5qS&lead_source=web_f1cor

e)[Subscribe](/subscribe-to-f1-tv)[](/)

* [Latest](https://www.formula1.com/en/latest)

* [Video](https://www.formula1.com/en/video)

* [F1 Unlocked](https://www.formula1.com/en/page/discover-unlocked)

* [Schedule](https://www.formula1.com/en/racing/2025)

* [Results](https://www.formula1.com/en/results/2024/races)

* [Drivers](https://www.formula1.com/en/drivers)

* [Teams](https://www.formula1.com/en/teams)

* [Gaming](https://www.formula1.com/en/page/gaming)

* [Live Timing](https://www.formula1.com/en/timing/f1-live)

News

The 2025 Formula 1 calendar in full

===================================

.

.

.

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ Step 3 ━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━

─ Executing this code: ───────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

driver_lineups = {

"Red Bull": ["Max Verstappen – #1", "Liam Lawson – #30"],

"Ferrari": ["Charles Leclerc – #16", "Lewis Hamilton – #44"],

"McLaren": ["Lando Norris – #4", "Oscar Piastri – #81"],

"Mercedes": ["George Russell – #63", "Valtteri Bottas – #77"],

"Aston Martin": ["Fernando Alonso – #14", "Lance Stroll – #18"],

"Alpine": ["Esteban Ocon – #31", "Nyck de Vries – #55"],

"Alfa Romeo": ["Guanyu Zhou – #24", "Kevin Magnussen – #20"],

"Haas": ["Nikita Mazepin – #9", "Kevin Magnussen – #20"],

"Williams": ["Alexander Albon – #23", "Carlos Sainz – #55"],

"Kick Sauber": ["Nico Hulkenberg – #27", "Gabriel Bortoleto – #5"]

}

# Print the driver line-ups for each team

for team, drivers in driver_lineups.items():

print(f"{team}: {', '.join(drivers)}")

final_answer(driver_lineups)

──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

Execution logs:

Red Bull: Max Verstappen – #1, Liam Lawson – #30

Ferrari: Charles Leclerc – #16, Lewis Hamilton – #44

McLaren: Lando Norris – #4, Oscar Piastri – #81

Mercedes: George Russell – #63, Valtteri Bottas – #77

Aston Martin: Fernando Alonso – #14, Lance Stroll – #18

Alpine: Esteban Ocon – #31, Nyck de Vries – #55

Alfa Romeo: Guanyu Zhou – #24, Kevin Magnussen – #20

Haas: Nikita Mazepin – #9, Kevin Magnussen – #20

Williams: Alexander Albon – #23, Carlos Sainz – #55

Kick Sauber: Nico Hulkenberg – #27, Gabriel Bortoleto – #5

Out - Final answer: {'Red Bull': ['Max Verstappen – #1', 'Liam Lawson – #30'], 'Ferrari': ['Charles Leclerc – #16', 'Lewis Hamilton – #44'], 'McLaren': ['Lando Norris – #4', 'Oscar Piastri – #81'],

'Mercedes': ['George Russell – #63', 'Valtteri Bottas – #77'], 'Aston Martin': ['Fernando Alonso – #14', 'Lance Stroll – #18'], 'Alpine': ['Esteban Ocon – #31', 'Nyck de Vries – #55'], 'Alfa Romeo':

['Guanyu Zhou – #24', 'Kevin Magnussen – #20'], 'Haas': ['Nikita Mazepin – #9', 'Kevin Magnussen – #20'], 'Williams': ['Alexander Albon – #23', 'Carlos Sainz – #55'], 'Kick Sauber': ['Nico Hulkenberg

– #27', 'Gabriel Bortoleto – #5']}

It went over almost all the links present on the page and found the answer by step 3. We can see that the model’s result is correct for a few teams and wrong for others. This is happening because of the results from multiple pages from multiple dates. As the driver lineup changes, the URL information gets updated. However, in such cases, the model might find it difficult to collect the updated information from all web pages.

Although partially correct and in JSON format which will require additional formatting, it is impressive, nonetheless. Perhaps, additional prompting for extracting the latest information only will mitigate the issue. However, all such speculations are up for experimentation.

Summary and Conclusion

In this article, We covered the basics of using the Hugging Face smolagents library. We started with the requirement for the smolagents library and visited 4 examples. Along the way, we discovered where agentic workflow can be helpful and where it can make mistakes. We have not covered how to create custom tools. We will do so along with more complex use cases in future articles. I hope this article was worth your time.

If you have any questions, thoughts, or suggestions, please leave them in the comment section. I will surely address them.

You can contact me using the Contact section. You can also find me on LinkedIn, and Twitter.

1 thought on “Getting Started with Smolagents”