This article is going to be straightforward. We are going to do what the title says – we will be pretraining the DINOv2 model for semantic segmentation. We have covered several articles on training DINOv2 for segmentation. These include articles for person segmentation, training on the Pascal VOC dataset, and carrying out fine-tuning vs transfer learning experiments as well. Although DINOv2 offers a powerful backbone, pretraining the head on a larger dataset can lead to better results on downstream tasks.

In this article, we will pretrain a simple Linear Semantic Segmentation head on the COCO dataset.

- First, we will make the necessary modifications to the model and the training script. We will adapt the semantic segmentation pretraining scripts from Torchvision.

- Second, we will pretrain the segmentation head on top of DINOv2 features for 30 epochs.

- Third, we will run inference on images and videos.

The article will cover all the hyperparameters, necessary changes, and adaptations you will need for your experiments.

The COCO Dataset for Semantic Segmentation

The MS COCO dataset is primarily known for object detection. However, the Torchvision reference scripts also provide code for pretraining models for semantic segmentation tasks on the same dataset.

The process is quite simple.

- First, copy/clone the Torchvision reference scripts for semantic segmentation.

- Next, we download the MS COCO dataset.

- Finally, we run the training script while providing the path to the dataset.

The script automatically converts the dataset into a semantic segmentation one as the JSON annotation files contain the mask data. The only caveat is that instead of the 81 classes in the COCO dataset, it trains on the 21 classes from the Pascal VOC dataset. This corresponds to around 80000 images from the COCO dataset.

Of course, we will need to adapt the script to a certain extent as we are not using the models directly available in Torchvision. You can find some more details about creating a custom LRASPP segmentation model pretraining here.

You can download the dataset from here on Kaggle. The following is the directory structure after extracting it.

coco2017/ ├── annotations │ ├── captions_train2017.json │ ├── captions_val2017.json │ ├── instances_train2017.json │ ├── instances_val2017.json │ ├── person_keypoints_train2017.json │ └── person_keypoints_val2017.json ├── test2017 [40670 entries exceeds filelimit, not opening dir] ├── train2017 [118287 entries exceeds filelimit, not opening dir] └── val2017 [5000 entries exceeds filelimit, not opening dir]

If you wish to get more details on pretraining on the Pascal VOC dataset as well, this article on Training FasterViT on VOC Segmentation Dataset will surely help.

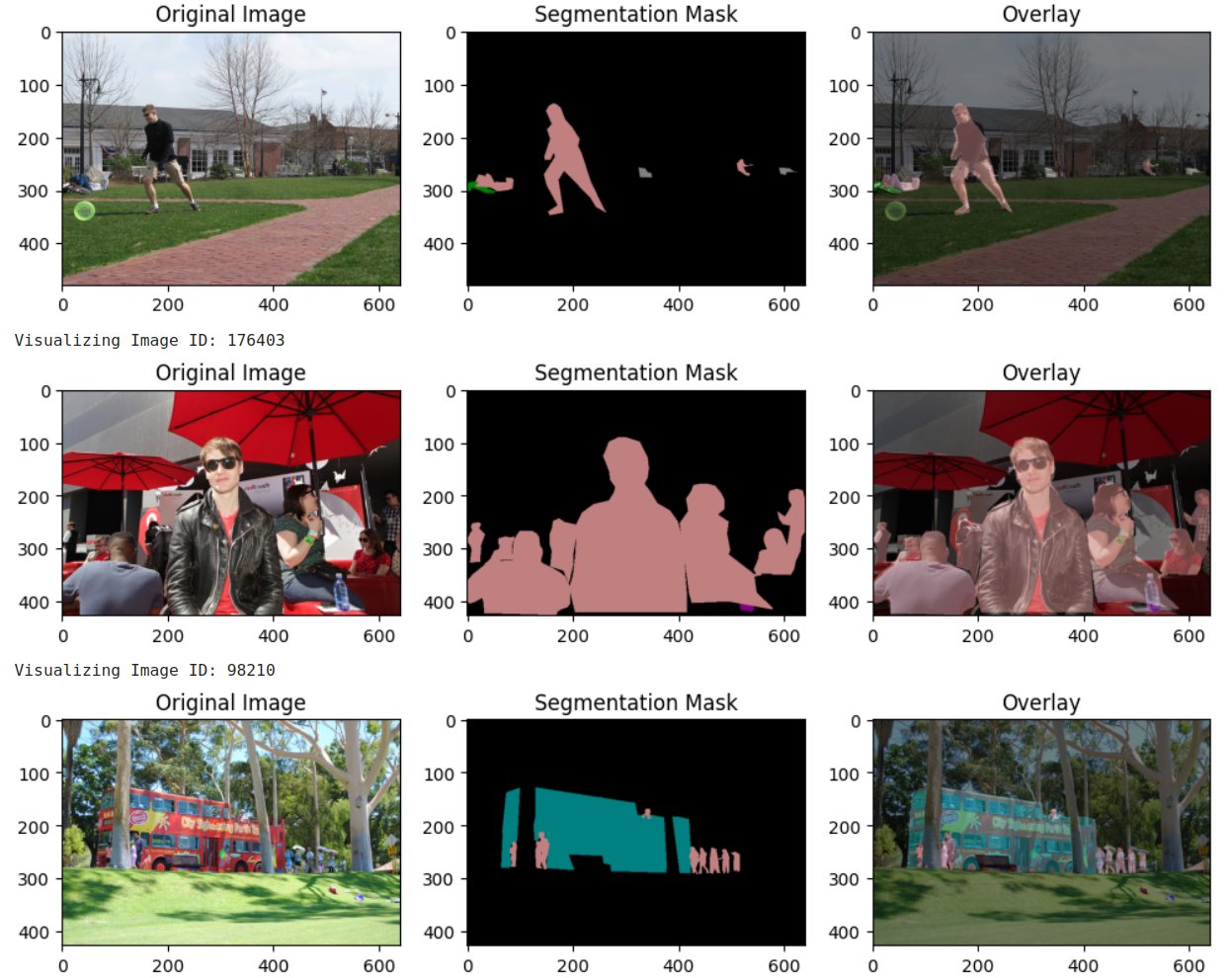

Following are some samples from the COCO dataset along with their segmentation masks.

Project Directory Structure

The following block shows the project directory structure.

├── coco2017 │ ├── annotations │ ├── test2017 │ ├── train2017 │ └── val2017 ├── inference_data │ ├── images │ └── videos ├── models │ ├── dinov2_seg.py │ └── model_config.py ├── notebooks │ └── visualizations.ipynb ├── outputs │ ├── dinov2_seg_pretrain │ ├── dinov2_seg_pretrain_finetune │ └── inference_results_video ├── coco_utils.py ├── config.py ├── custom_utils.py ├── inference_image.py ├── inference_video.py ├── __init__.py ├── presets.py ├── README.md ├── requirements.txt ├── train.py ├── transforms.py ├── utils.py └── v2_extras.py

- The

coco2017directory contains the extracted MS COCO dataset. - The

inference_datadirectory contains the images and videos that we will use for inference after pretraining the DINOv2 model for semantic segmentation. - Directly inside the project directory, we have all the Torchvision reference scripts for training.

- The

modelsdirectory contains the code for DINOv2 segmentation modeling. - The

outputsdirectory contains all the pretraining and inference results. - Finally, the

notebooksdirectory has a Jupyter Notebook for dataset visualization.

You do not need to download the Torchvision inference scripts on your own. The code covered here has been adapted for pretraining DINOv2 for semantic segmentation and a zipped file with the code, final model checkpoint, and inference data is provided via the download section. If you wish to carry out training on your own, you need to download the dataset and arrange it in the above structure.

Download Code

Installing Necessary Requirements

You can install all the major libraries via the requirements file.

pip install -r requirements.txt

That’s all the setup that we need. Let’s jump into some of the important coding parts.

Pretraining DINOv2 for Semantic Segmentation

The codebase is quite large, so, we will cover only the necessary parts here.

The DINOv2 Semantic Segmentation Model

We have already covered the DINOv2 segmentation model in previous articles. Some very minor changes were made to this project.

The primary model code resides in the models/dinov2_seg.py file.

Let’s take a look at the segmentation head in the file.

class LinearClassifierToken(torch.nn.Module):

def __init__(self, in_channels, nc=1, tokenW=32, tokenH=32):

super(LinearClassifierToken, self).__init__()

self.in_channels = in_channels

self.W = tokenW

self.H = tokenH

self.nc = nc

self.conv = torch.nn.Conv2d(in_channels, nc, (1, 1))

def forward(self,x):

outputs = self.conv(

x.reshape(-1, self.in_channels, self.H, self.W)

)

upsampled_logits = nn.functional.interpolate(

outputs, size=(self.H*14, self.W*14),

mode='bilinear',

align_corners=False

)

return {'out': upsampled_logits}

The nc parameter defines the number of classes in the dataset and is now configurable while initializing the model.

However, our scripts (in other code files) hardcode the image resolution that DINOv2 with ViT-Small accepts, which is 644×644. That is one major constraint that we look forward to removing in future versions of DINO projects.

The Training Script

We make some minor changes to the training script (train.py) as well. The places in the code have been commented as # NOTE for easier recognition.

The first change is loading our own model.

# NOTE: We are loading our own model here. # model = torchvision.models.get_model( # args.model, # weights=args.weights, # weights_backbone=args.weights_backbone, # num_classes=num_classes, # aux_loss=args.aux_loss, # ) model = Dinov2Segmentation(num_classes=num_classes, fine_tune=args.fine_tune) summary(model)

As our model does not contain a backbone attribute, we need to change the parameters that need to be optimized.

# NOTE: We are updating how to handle the model parameter update.

# params_to_optimize = [

# {"params": [p for p in model_without_ddp.backbone.parameters() if p.requires_grad]},

# {"params": [p for p in model_without_ddp.classifier.parameters() if p.requires_grad]},

# ]

params_to_optimize = [

{"params": [p for p in model_without_ddp.parameters() if p.requires_grad]}

]

These are all the major changes in the training script.

The Presets Configuration

The presets.py file handles the dataset transforms. We comment out the random cropping as that would throw shape error in the case of DINOv2 model.

Also, we hardcode the image resolution to 644×644.

Training the Model

The training shown here was carried out on a machine with 10GB RTX 3080 GPU, 32GB RAM, and an i7 10th generation CPU.

You can execute the following command to start the training.

torchrun --nproc_per_node=1 train.py --lr 0.02 --dataset coco -b 32 -j 8 --amp --output-dir outputs/dinov2_seg_pretrain --data-path coco2017 --use-v2

As we are training just the segmentation head here, so, we use a higher learning rate of 0.02. The --data-path defines the path to the dataset. The batch size is 32 with 8 workers for data loading. We train for 30 epochs. After training, the checkpoints are stored in outputs/dinov2_seg_pretrain directory.

This training run took around 24 hours to complete. Only 64,554 parameters of the segmentation head were trained while keeping the backbone frozen.

After the final epoch, the mean IoU on the validation dataset was 67.5%.

Inference Experiments

We have two scripts for inference, inference_image.py for running inference on images, and inference_video.py for running inference on videos. Both scripts accept similar command line arguments. One is the path to the model checkpoint, the image inference script accepts a directory path containing images, and the video inference script accepts the path to a video file.

Running Inference on Images using the Pretrained DINOv2 Semantic Segmentation Model

To start running inference on images, we execute the following script:

python inference_image.py --model outputs/dinov2_seg_pretrain/checkpoint.pth --input inference_data/images/

The following are the three image results on which we ran inference.

In this case, there are three children and a dog. The model can segment all of them well. But we can see the segmentation maps of the boy’s legs at the bottom are imperfect because of the occlusion by the plants. Even the dog’s segmentation maps are not good enough. This shows issues with partially occluded and small objects.

Here, we have a person and two horses. Upon detailed observation, we can see how the model suffers from imperfect segmentation of the horse’s legs. This shows issues in segmenting thin structures.

Finally, we have a more challenging small object scene here. The people are smaller here and the vehicles are closer together. The model is segmenting the vehicles as one large blob and combining the segmentation maps of multiple persons as well.

We will try to perfect these issues in future projects.

Running Inference on Videos using the Pretrained DINOv2 Semantic Segmentation Model

Now, let’s run inference on videos.

python inference_video.py --model outputs/dinov2_seg_pretrain/checkpoint.pth --input inference_data/videos/video_2.mp4

We start with a simple video with horses.

Although the segmentation results look good, we can see some dilation at the borders of the segmentation map, as if the segmentation is bleeding out of the object.

Let’s take a look at another instance.

python inference_video.py --model outputs/dinov2_seg_pretrain/checkpoint.pth --input inference_data/videos/video_1.mp4

Although not as prominent, we can see similar issue here as well.

Most probably, we can resolve this issue via fine-tuning.

Furthermore, the above experiments were run on an RTX 3080 GPU and we are getting only 28 FPS on average. This indicates that the model in its current state may not be optimal for real-time applications without further optimization.

More Articles on DINOv2

- DINOv2 for Image Classification: Fine-Tuning vs Transfer Learning

- DINOv2 for Semantic Segmentation

- DINOv2 Segmentation – Fine-Tuning and Transfer Learning Experiments

Summary and Conclusion

In this article, we pretrained a DINOv2 semantic segmentation head on the MS COCO dataset. We started with the dataset exploration, discussed the changes made to the model, trained it, and ran inference. We also discussed the issues with the results that we will try to resolve in future projects. I hope this article was worth your time.

If you have any questions, thoughts, or suggestions, please leave them in the comment section. I will surely answer them.

You can contact me using the Contact section. You can also find me on LinkedIn, and Twitter.