Instruction-Following Notebook Interface Using Phi 1.5

In this article, we create an instruction-following Jupyter Notebook interface to prompt a fine-tuned Phi 1.5 model. ...

In this article, we cover how to get started with Ollama locally in an Ubuntu environment. We cover downloading models, different model tags, and VLMs such as Llava7B. ...

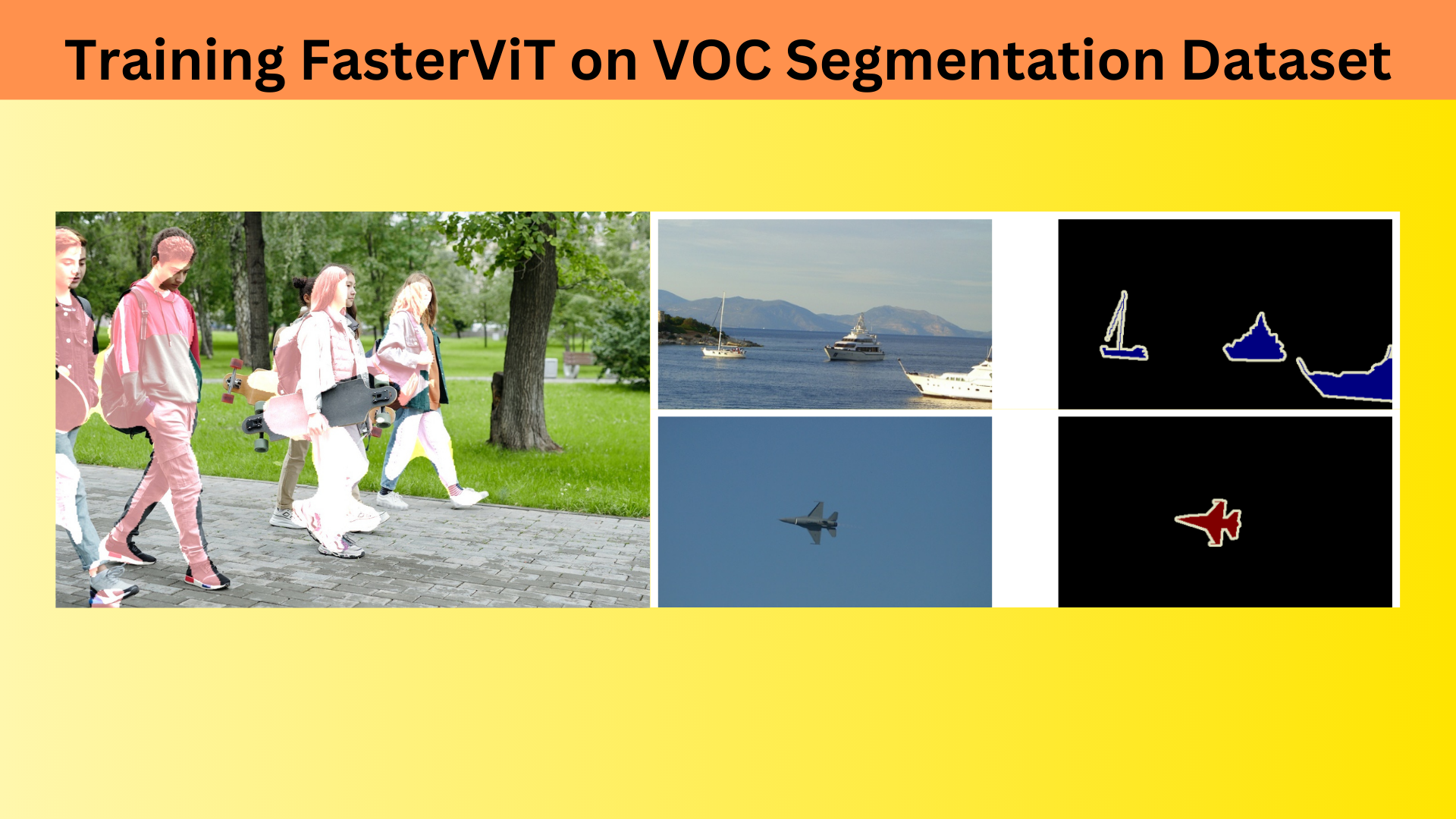

In this article, we train FasterViT on the Pascal VOC semantic segmentation dataset using the PyTorch deep learning framework. ...

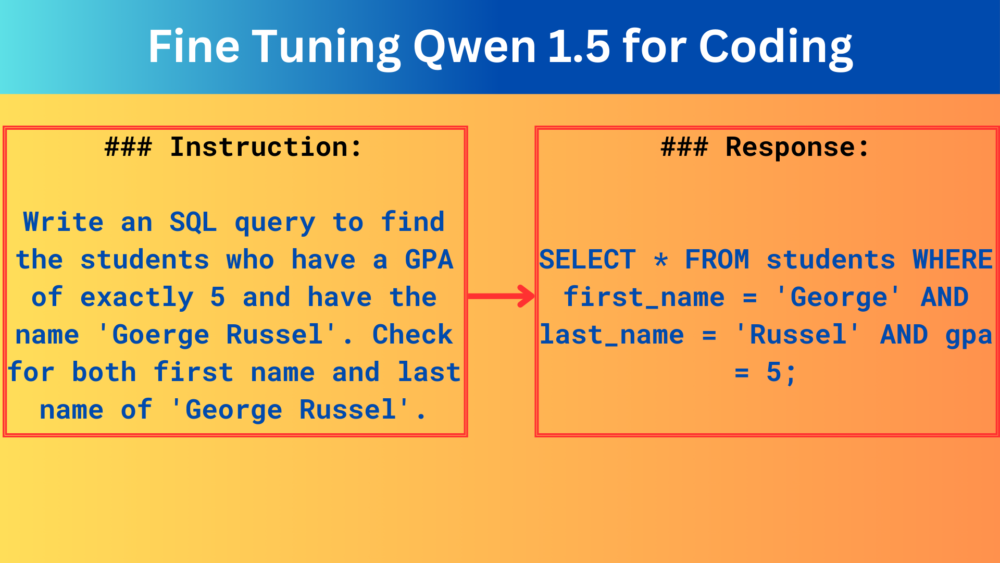

In this article, we fine-tune the Qwen 1.5 0.5B model on the CodeAlpaca dataset for coding, using the Hugging Face Transformers SFT Trainer pipeline. ...

In this article, we fine-tune the Phi 1.5 model using QLoRA on the Stanford Alpaca dataset with Hugging Face Transformers. ...
