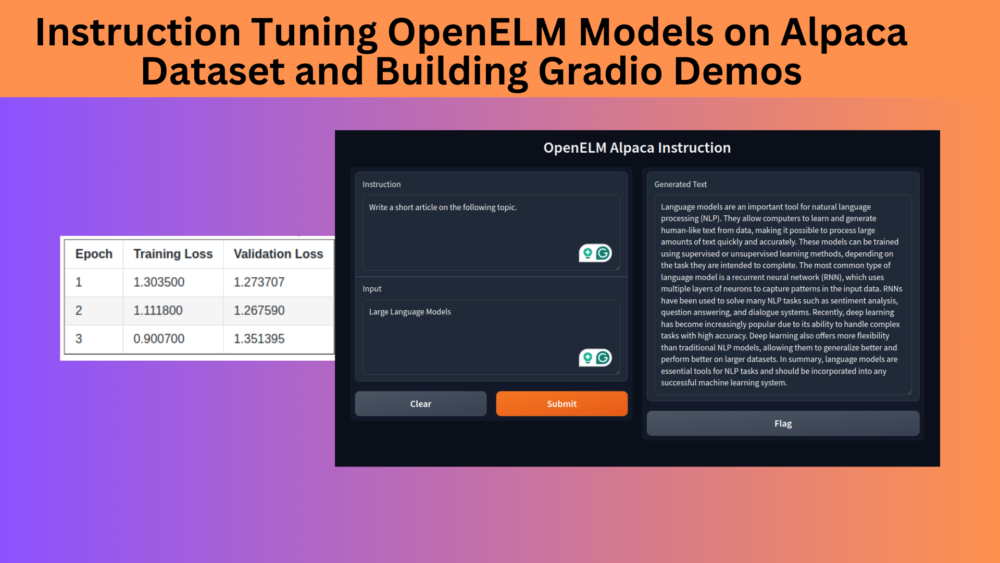

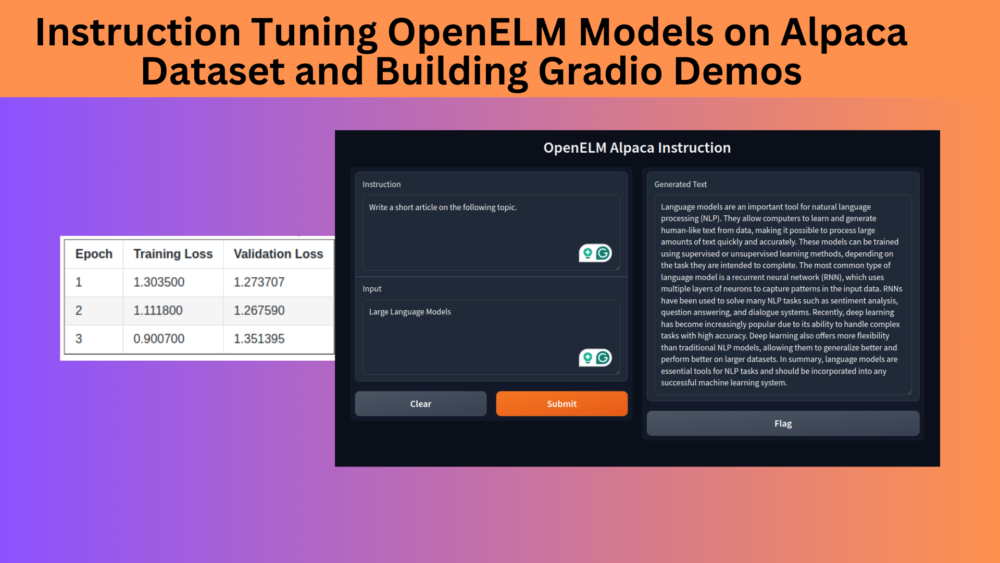

Instruction Tuning OpenELM Models on Alpaca Dataset and Building Gradio Demos

In this article, we instruction tune the OpenELM-450M model on the Alpaca dataset and build a Gradio demo for inference. ...

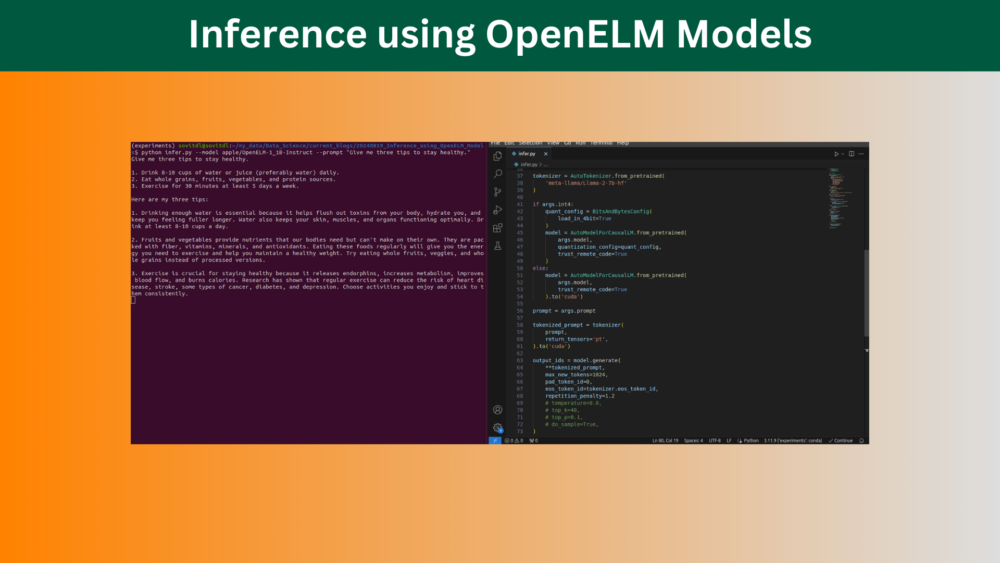

In this article, we run inference using the base and instruction-tuned OpenELM models with the Hugging Face library. ...

In this article, we explore the OpenELM model from Apple. We go through the model's scaling strategy, the pretraining datasets, benchmark results, and where the model falls short. ...

In this article, we fine-tune the LRASPP MobileNetV3 model on the entire IDD (Indian Driving Dataset) for semantic segmentation. ...

In this article, we train the LRASPP MobileNetV3 model on a subset of the Indian Driving Dataset for semantic segmentation. ...
