In this article, we will run code inference using the SmolVLM2 models. We will run inference using several SmolVLM2 models for text, image, and video understanding.

Here, rather than diving deep into the model theory and architecture, we will jump right into the code. This will give us a first-hand experience of the capabilities of the model and where it is lacking.

What will we cover in SmolVLM2 code inference?

- What models are available in the SmolVLM2 family?

- What tasks are they capable of?

- Text, image, and video understanding using SmolVLM2 for several tasks like image & video captioning, OCR, and text extraction.

The SmolVLM2 Family of Models

SmolVLM2 contains four different models, three in the instruct series and one base model:

- SmolVLM2-2.2B-Instruct

- SmolVLM2-500M-Video-Instruct

- SmolVLM2-256M-Video-Instruct

- SmolVLM2-2.2B-Base

The version 2 models are an upgrade to the SmolVLM models and are trained especially for video understanding.

In this article, we will run inference experiments for different use cases and compare the results between the 2.2B and 256M instruct models.

Directory Structure

The following is the directory structure for the project directory.

├── input │ ├── image_1.jpg │ ├── image_2.jpeg │ ├── image_3.jpg │ └── video_1.mp4 ├── README.md └── smolvlm2_inference.py

- We have an

inputdirectory with all the images and videos that we will use for inference. - A single Python script,

smolvlm2_inference.pycontains all the code that we need. - The

README.mdfile contains the links to the image and video sources along with the prompts used in this article.

The download code section allows you to download the script and data used for inference.

Download Code

Installing Requirements

The code in this article uses PyTorch 2.5.1 and Torchvision 0.20.1. Along with that, we need the latest verions of Transformers and a package called num2words for multimodal inference.

pip install -U transformers num2words

Inference using SmolVLM2

Let’s get started with the inference section using SmolVLM2. All the code is present in the smolvlm2_inference.py file.

The following block contains the entire code:

import argparse

import torch

from transformers import AutoProcessor, AutoModelForImageTextToText

parser = argparse.ArgumentParser()

parser.add_argument(

'--model',

help='hugging face model id',

default='HuggingFaceTB/SmolVLM2-2.2B-Instruct'

)

parser.add_argument(

'--input',

help='series of input of images and videos',

nargs='+',

required=True

)

parser.add_argument(

'--prompt',

help='user prompt',

required=True

)

args = parser.parse_args()

model_path = args.model

processor = AutoProcessor.from_pretrained(model_path)

model = AutoModelForImageTextToText.from_pretrained(

model_path,

torch_dtype=torch.bfloat16,

_attn_implementation='flash_attention_2'

).to('cuda')

image_ext = ['.jpg', 'jpeg', '.png']

video_ext = ['.mp4', '.avi']

# Creating the content list for single/multiple inputs.

content = []

# At the moment, the model can either process multiple images or multiple

# videos but cannot process a mix of both.

for input_path in args.input:

for ext in image_ext:

if ext in input_path:

content_type = {'type': 'image', 'url': input_path}

content.append(content_type)

for ext in video_ext:

if ext in input_path:

content_type = {'type': 'video', 'path': input_path}

content.append(content_type)

# # Add the user prompt.

content.append(

{'type': 'text', 'text': args.prompt}

)

messages = [

{

'role': 'user',

'content': content

},

]

inputs = processor.apply_chat_template(

messages,

add_generation_prompt=True,

tokenize=True,

return_dict=True,

return_tensors='pt',

).to(model.device, dtype=torch.bfloat16)

generated_ids = model.generate(

**inputs,

do_sample=False,

max_new_tokens=512

)

# Trim the generated ids to remove the input ids

trimmed_generated_ids = [

generated_ids[len(in_ids):] \

for in_ids, generated_ids in zip(inputs.input_ids, generated_ids)

]

generated_texts = processor.batch_decode(

trimmed_generated_ids,

skip_special_tokens=True,

)

print(generated_texts[0])

Understanding the Above Code (Steps for inference using SmolVLM2)

Let’s go through some of the important sections of the codebase.

- Import statements and argument parsers (lines 1 to 23): We start with the necessary imports and define the command line arguments. We can pass any model from the SmolVLM2 family using the

--modelcommand line argument. With--input, we can pass either multiple images or videos for interleaved inference. And the--promptargument allows us to pass the user prompt. - Loading the model and processor (lines 25 to 31): Next, we load the SmolVLM2 model and processor and transfer the model to the CUDA device.

- Preparing the message prompt (lines 33 to 61): We define different extensions for images and videos so that we are not restricted to any one format. As we can pass multiple images and videos for interleaved inference, we run all the inputs through a

forloop and create thecontentlist of themessagesthat we need to feed to the model. This is necessary because the syntax for image and video inputs is different, as we may see in lines 44 and 48. In the end, we append the user prompt. - Processing the inputs and forward pass through the model (lines 64 to 76): Next, we prepare the inputs by calling the

apply_chat_templatefunction of the SmolVLM2 processor. Then we pass the inputs through the model to generate the output. - Decoding the output (lines 78 to 89): We trim the generated tokens to exclude the input user prompt. Finally, we decode the tokens generated by the model and print the output.

This is all the code that we need before executing the code.

Inference with SmolVLM2-2.2B-Instruct

We will start with the largest model with 2.2B parameters. Even the largest model can run within 8GB VRAM in FP16/BF16 precision.

All the following experiments were run on a machine with a 10GB RTX 3080 GPU.

Image Description Experiment

Let’s ask the model to describe the following image.

We can use the following command for this.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-2.2B-Instruct --input input/image_1.jpg --prompt "Describe this image"

We get the following output.

The image depicts a close-up view of a cluster of purple flowers, likely a type of chrysanthemum, with a bee in the foreground. The flowers are in full bloom, showcasing a vibrant purple hue with a yellow center, which is typical of many chrysanthemum species. The bee, which appears to be a honeybee, is actively collecting nectar from the flowers. The bee is positioned on the right side of the image, with its body oriented towards the center of the cluster of flowers. The bee's wings are slightly blurred, indicating motion, and its antennae are clearly visible. The background of the image is blurred, creating a bokeh effect that emphasizes the flowers and the bee. The background is composed of various shades of purple and green, suggesting a garden or a natural outdoor setting. The green elements in the background could be leaves or other plants, contributing to the overall natural ambiance of the scene. The image captures a moment of interaction between the bee and the flowers, highlighting the bee's role as a pollinator. The bee's presence on the flowers suggests that it is likely collecting nectar, which is a vital resource for bees as they use it for energy and to sustain their hives. The flowers, in turn, provide the bee with a source of food. The image also showcases the beauty of nature and the intricate relationships within ecosystems. The presence of the bee on the flowers indicates a healthy and thriving environment, where different species coexist and contribute to each other's survival. In summary, the image is a detailed and vibrant depiction of a bee interacting with purple flowers, set against a blurred natural background. The focus on the bee and the flowers emphasizes the importance of pollination and the delicate balance of ecosystems.

The description is quite detailed, although some of the described elements are not present in the image. Still, for a 2.2B model, it is quite impressive.

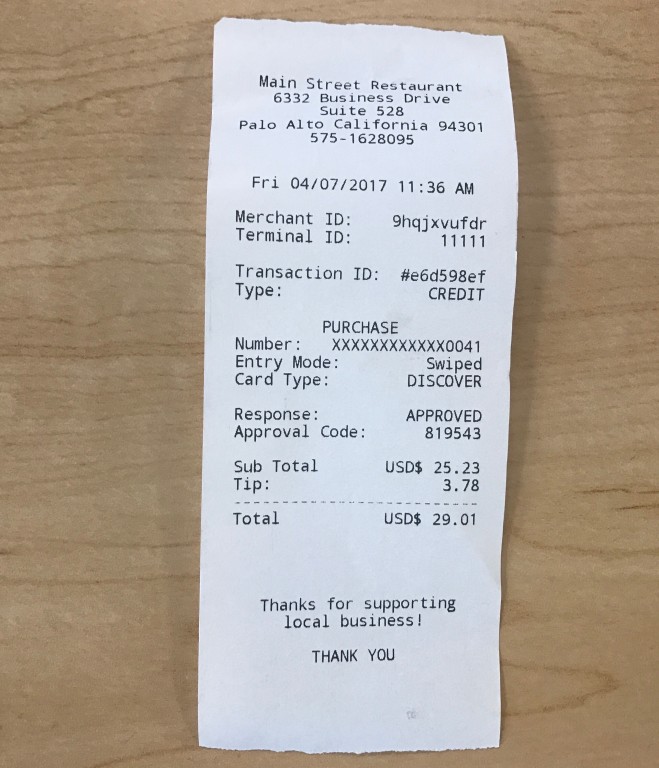

Receipt OCR Experiment

For the next experiment, we will provide the model with the following image and ask it to carry out OCR.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-2.2B-Instruct --input input/image_2.jpeg --prompt "Give OCR results of this image and no additional text"

The prompt has to be structured in the above way so that the model just OCRs the image without extra text.

The following block shows the output.

Main Street Restaurant 6332 Business Drive Suite 528 Palo Alto California 94301 575-1628095 Fri 04/07/2017 11:36 AM Merchant ID: 9hqjxvufdr Terminal ID: 11111 Transaction ID: #e6d598ef CREDIT PURCHASE Entry Mode: Swiped Card Type: DISCOVER Response: APPROVED Approval Code: 819543 Sub Total USD$ 25.23 Tip: 3.78 Total USD$ 29.01

The OCR result is accurate, however, there is a major problem. The model misses out the structure and new lines entirely. It is one complete line of string without any breaks, which is certainly undesirable in real-life settings.

Interleaved Image Inference

Let’s carry out inference by interleaving two images into a single message prompt and asking the model what it sees. One of the images is of the bee that we saw earlier, and the other one is a black and white image of two horses.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-2.2B-Instruct --input input/image_1.jpg input/image_3.jpg --prompt "What do these two images show?"

When we interleave two images, the final message has the following structure.

[

{

"role": "user",

"content": [

{

"type": "image",

"url": "input/image_1.jpg"

},

{

"type": "image",

"url": "input/image_3.jpg"

},

{

"type": "text",

"text": "What do these two images show?"

}

]

}

]

The content key now contains three dictionaries, two for the images, and one for the user prompt.

The following is the result that we get.

The first image shows a bee on a flower, while the second image shows two horses running in the field.

The description is quite apt. However, this is not always the case. Because SmolVLM2 is just a 2.2B parameter model, a slight change in the prompt can change the answer drastically. For example, here is another attempt with the same images but with a different user prompt.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-2.2B-Instruct --input input/image_1.jpg input/image_3.jpg --prompt "Describe these elements"

We ask the model to describe the elements and get the following result.

The image presents a serene scene of two horses galloping across a field. The horses, both in shades of black and white, are the main focus of the image. The horse on the left is slightly ahead of the other, creating a sense of motion and direction. The horse on the right is slightly behind, adding to the dynamic nature of the scene. The field they are running through is expansive and open, with a few trees visible in the background. The trees, though distant, add depth to the image and provide a sense of the vastness of the field. The image is in black and white, which adds a timeless quality to the scene. The absence of color allows the viewer to focus on the shapes and forms of the horses and the field, rather than being distracted by color. Overall, the image captures a moment of freedom and movement, with the horses running through a field under a clear sky. The use of black and white adds a dramatic effect, emphasizing the motion and energy of the scene.

The model describes only the image of the horses. It does not say anything about the bee image.

Video Description and Counting

For the final experiments using SmolVLM2-2.2B, we will first give it the following video to describe.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-2.2B-Instruct --input input/video_1.mp4 --prompt "Decsribe this video"

We get the following result.

The video begins with a wide shot of a vast, open field under a cloudy sky. In the distance, a line of trees and a building can be seen. Two horses, one white and one brown, are seen running across the field. The white horse is ahead of the brown horse, and both horses are moving quickly. The scene then transitions to a close-up of the white horse, which is now alone in the field. The brown horse is no longer visible. The white horse continues to run across the field, and the camera follows its movement. The video concludes with the white horse still running across the field, with the brown horse no longer visible.

The model gives a detailed description of the video. However, it hallucinates. Instead of three, it describes only two horses, and the brown horse never moves out of the camera.

Let’s prompt the model in a different way and check whether it is able to detect all the horses accurately.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-2.2B-Instruct --input input/video_1.mp4 --prompt "How many horses are there in this video and what are there colors?"

This time we ask it to count the horses and describe their colors.

There are three horses in the video, one white, one brown, and one grey.

Interestingly, this time the model is completely correct. This shows how much correct prompting might be necessary when dealing with small Vision Language Models.

Inference with SmolVLM2-256M-Instruct

We will carry out some of the above experiments with the smallest model in the family, SmoLVLM2-256M. This will give us a good idea of the gap in the capabilities of the largest and smallest models in the series.

OCR Experiment

Let’s start with the OCR experiment.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-256M-Video-Instruct --input input/image_2.jpeg --prompt "Give OCR results of this image and no additional text"

We get the following result.

The image is a receipt from Main Street Restaurant located at 6332 Business Drive, Suite 528, Palo Alto, California 94301. The receipt is dated Fri 04/07/2017 at 11:36 AM and has a transaction ID of 9qhjvu4f 11111. The transaction type is "Purchase" with a purchase number of XXXXXXXXXXXXXXXXXXXXX04. The card type is "Swiped" with a card number of 0041. The transaction amount is $25.23, and the total amount is $29.01. The receipt also includes a note that thanks for supporting local business!

Instead of just the OCR result, we get a description of the image. This is not ideal for most cases. This requires further fine-tuning. If you are interested in such a use case, you should read how we can fine-tune SmolVLM-256M for receipt OCR.

Counting in Video

The final experiment involves asking the model to count and describe the color of the horses in the video.

python smolvlm2_inference.py --model HuggingFaceTB/SmolVLM2-256M-Video-Instruct --input input/video_1.mp4 --prompt "How many horses are there in this video and what are there colors?"

The following is the result.

There are three horses in the video.

The count is correct, however, the model does not say anything about the color of the horses.

Takeaways and Further Improvements

From the above, it is clear that the new iteration of the SmolVLM2 family can give impressive results with minimal VRAM requirements. They can even be easily deployed on mobile devices. However, because of their small size, they are not perfect.

For specific use cases like counting and receipt OCR, further fine-tuning is necessary. We will surely try to explore these in future articles.

Summary and Conclusion

In this article, we covered the inference code for the SmolVLM2 family of models. We started with a small discussion of the various model sizes available and jumped right into the code. We carried out image & video description, receipt OCR, and counting of objects. Along with that, we also discussed the drawbacks and how to mitigate them. I hope this article was worth your time.

If you have any questions, thoughts, or suggestions, please leave them in the comment section. I will surely address them.

You can contact me using the Contact section. You can also find me on LinkedIn, and Twitter.