In this article, we will focus on getting up and running with Ollama with the most common use cases.

Ollama is an application for running LLMs (Large Language Models) and VLMs (Vision Language Models) locally. The most basic usage requires a minimal learning curve and setting it up (on Linux) is one line of command.

In this article, we will solely focus on a very few and important things to get started with Ollama.

We will cover the following topics here:

- How to install Ollama?

- How to download a model using Ollama?

- Using text and chat models in Ollama.

- Passing multi-line prompts to models.

- Querying local documents using Ollama.

- Using VLMs (Vision Language Models) with Ollama.

- Removing models and freeing up GPU memory after exiting Ollama (!important).

The steps shown here are supported on a Linux system. Please refer to the official docs for running on Mac OS or Windows.

Note: In this article, $ represents a terminal command, and >>> represents Ollama prompts.

How to Install Ollama?

Installing Ollama on Ubuntu is quite straightforward. It’s just one line of command. You can execute the following command on the terminal to install Ollama.

$ curl -fsSL https://ollama.com/install.sh | sh

After installation, you will get a prompt on the terminal stating whether Ollama detects a GPU device or not. If not, then all the models will run on the CPU.

In case, you are running Ollama models on a CPU device, these are the official RAM requirements.

Note: You should have at least 8 GB of RAM available to run the 7B models, 16 GB to run the 13B models, and 32 GB to run the 33B models.

Ollama webiste

This completes all the necessary installation steps. Now, let’s jump into using our first model using Ollama.

How to Download a Model Using Ollama?

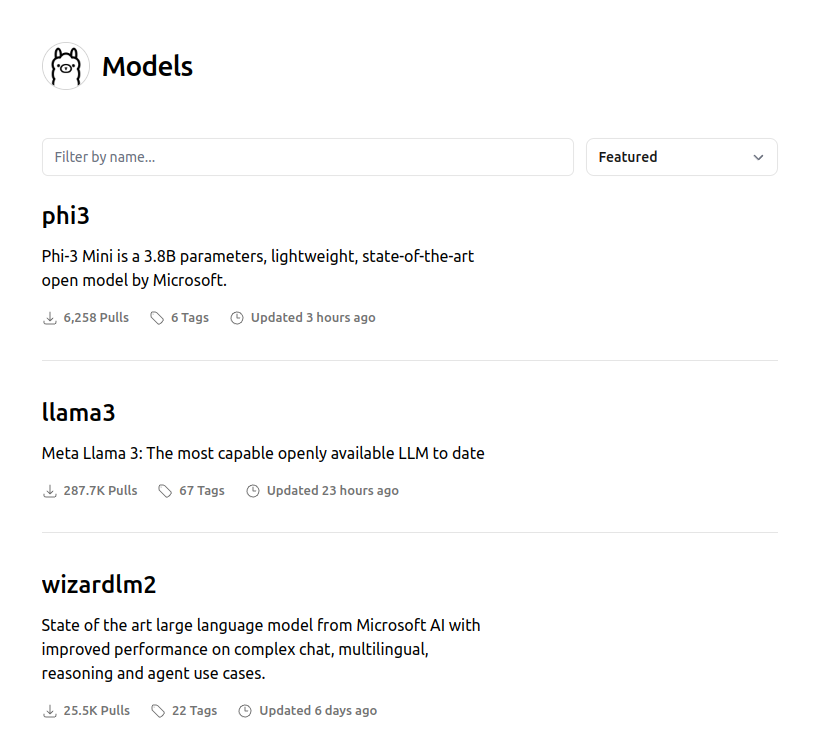

Downloading a model using Ollama is just as simple. It hosts numerous models including the latest Llama3 and Phi3.

You can click on the Models tab on the official Ollama website and check out all the featured models.

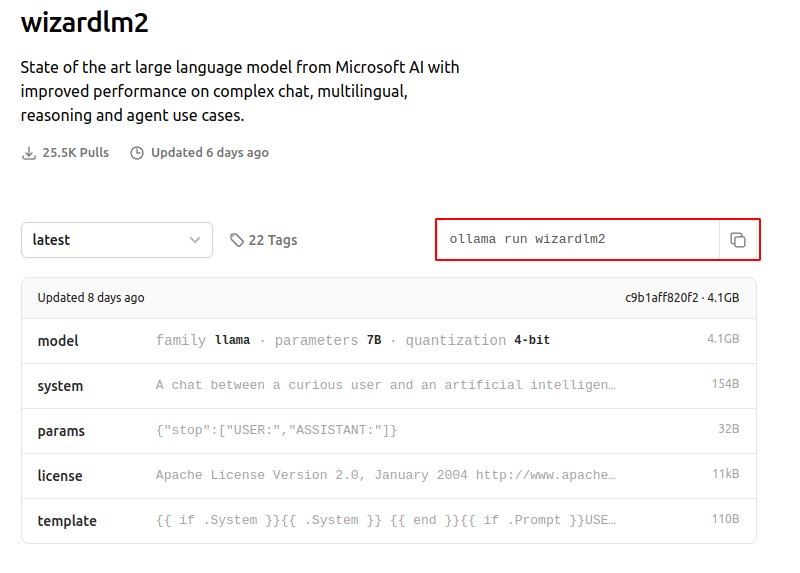

Let’s download the wizardlm2 model (not going into the details of the model at the moment). On every model page, you will see the following section containing all the model information along with the command to run it.

Let’s run the command.

$ ollama run wizardlm2

Running this the first time will download the model and its necessary artifacts.

Once complete, you will see the following prompt on the terminal.

>>> Send a message (/? for help)

This is the Ollama prompt where we can start chatting with the model. The model we have downloaded is a chat model. Let’s start chatting with it. Here are a series of chats.

>>> Hi. Hello! How can I assist you today? If you have any questions or need help with something, feel free to ask. >>> Tell me about LLMs in 200 words. Language Models (LMs) like Large Language Models (LLMs) are advanced AI systems designed to understand, generate, and sometimes translate human language. They are trained on vast amounts of text data, learning patterns, nuances, and structures of language. LLMs are a subset of LMs that are particularly large and complex, with parameters counting in the billions. This scale allows them to have a deep understanding of context, enabling them to perform a wide range of tasks without task-specific training, such as answering questions, summarizing texts, translating between languages, and generating human-like text. The most well-known LLMs include OpenAI's GPT (Generative Pre-trained Transformer) series, Google's BERT (Bidirectional Encoder Representations from Transformers), and others like T5 (Text-to-Text Transfer Transformer). These models are built using transformer architectures, which rely on self-attention mechanisms to weigh the influence of different parts of the input data differently. This allows the model to consider the full context of a sentence or paragraph when making predictions or generating responses. LLMs have significantly advanced natural language processing (NLP) capabilities, leading to improvements in machine comprehension and the ability to generate more coherent and contextually relevant text. They continue to be at the forefront of AI research and application, with ongoing developments aimed at enhancing their performance, reducing biases, and expanding their utility across diverse domains and languages. >>> Thanks. What are we talking about, again? We're discussing Large Language Models (LLMs), which are sophisticated artificial intelligence systems designed to process, understand, and generate human language. These models are a significant advancement in the field of natural language processing (NLP) and can perform a wide array of language-related tasks, from answering questions to writing essays or code. They are built using deep learning techniques, particularly transformer architectures that allow them to consider the context within large bodies of text. LLMs like GPT-3, BERT, and T5 have demonstrated remarkable language capabilities and continue to be a focus of research and development for expanding their understanding and application across different languages and domains. >>>

As we can see, it follows our commands and prompts quite well and even remembers the context. To exit the Ollama prompt, we can simply type /bye.

>>> /bye

This will take us back to the command line prompt.

Trying out Gemma

In short, Gemma is an open-source LLM by Google. It has two variants, a 7B model and a 2B model. We will focus on the Gemma2B here.

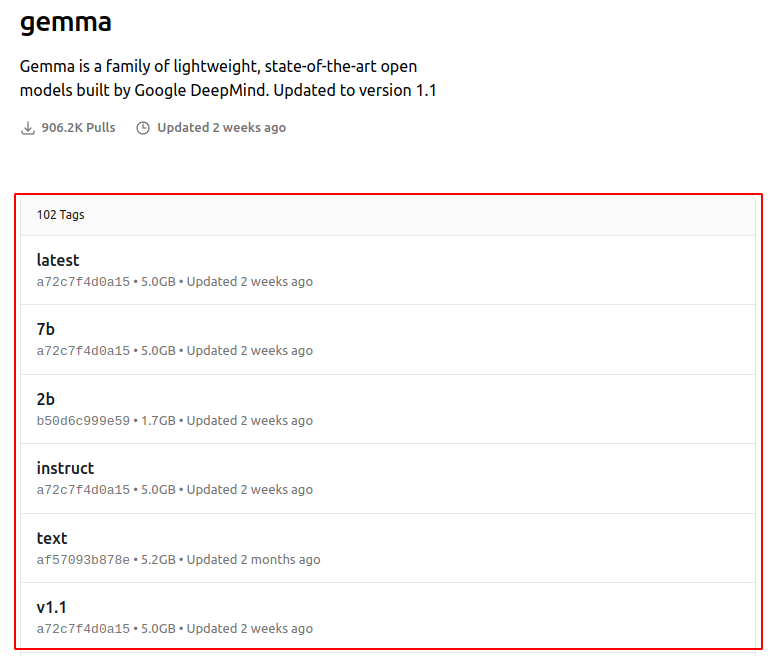

The Gemma page on Ollama shows this.

We can see the familiar model page along with a command to run the model. The default command will download the Gemma7B Instruct model. In general, instruct models and chat models are differently trained. However, in the case of Gemma, the instruct model is the same as the chat model. Along with that, we can also see 102 tags.

What are these tags? Clicking on it reveals the following.

We have models in several quantization formats for each, the Gemma2B and Gemma7B versions. Additionally, we can see something called text and instruct models.

In simple terms:

- Text models: Only complete the text.

- Instruct models: For chat and instruction.

Let’s download the text model and try it.

$ ollama run gemma:2b-text

When we do not provide any quantization format, it will download the INT4 quantized model by default.

Here are some chats from the downloaded model.

>>> Hi. I'm an award winning freelance photographer and have over 10 years experience in the photography world. I have shot everything from music, theatre, fashion, sport and commercial work. I'm also a huge fan of all things art related, music, design and fashion. I'm a huge lover of the outdoors and love to travel, I also love to run (half marathons) and play rugby. In 2018/2019 I was nominated for a World Press Photo Award and my work has been published in The Times, The Sunday Times, The Telegraph, The Guardian, The Metro, The Independent, The Daily Mirror, The Independent, The Evening Standard and more. I'm always available for commissions and I can work with you to make your project a reality. If you have any questions, or would like to collaborate please get in touch! >>> Send a message (/? for help)

Simply, the model starts completing the prompt that we provided.

Now, let’s try to run the Gemma2B instruct model.

$ ollama run gemma:2b-instruct

Here is the same chat as before.

>>> Hi. Hello! 👋 I'm happy to hear from you. What would you like to talk about today? >>> Send a message (/? for help)

It responds appropriately to our prompt.

In summary, we need to be careful which model we are downloading according to the use case. It is always better to check all the tags and model variants available before downloading a model.

As we discussed earlier, if the model download command does not contain any quantization information, then it is most likely downloading the 4-bit quantized model. If you want more accuracy (better responses to the prompts), you can also download the 16-bit quantized models. However, they will be larger in size, slower to run, and require more GPU memory.

As we have downloaded a few models now, we can also list all the models available with us using the following command.

$ ollama list

The output will be similar to the following.

NAME ID SIZE MODIFIED gemma:2b-instruct 030ee63283b5 1.6 GB 25 hours ago gemma:2b-text 4003359bdf67 1.7 GB 10 minutes ago llama3:8b-instruct-q4_0 71a106a91016 4.7 GB 44 hours ago llava:7b 8dd30f6b0cb1 4.7 GB 24 hours ago phi_1.5_oasst:latest 2bf713d229b1 962 MB 8 days ago qwen:0.5b-chat b5dc5e784f2a 394 MB 2 weeks ago qwen:1.8b b6e8ec2e7126 1.1 GB 10 days ago qwen_sft:latest a81a829bdf2f 1.1 GB 10 days ago wizardlm2:latest c9b1aff820f2 4.1 GB 25 hours ago tkdkid1000/phi-1_5:text 2978fbd5ba7b 918 MB 8 days ago

Using Multi-Line Prompts in Ollama

Until now, we have provided a single line prompt to Ollama. However, there will be numerous cases where will need to provide multi-line prompts to the model. We do so by enclosing the prompt within """. Here is an example.

>>> """

... Hi.

... Can you write a simple Python function to add two numbers.

... After that, write another function to subtract two numbers.

... Thanks.

... """

**Addition Function:**

```python

def add(a, b):

return a + b

```

**Subtraction Function:**

```python

def subtract(a, b):

return a - b

```

**Usage:**

```python

# Call the functions

sum = add(5, 7)

difference = subtract(10, 5)

# Print the results

print("Sum:", sum)

print("Difference:", difference)

```

After starting our prompt with """, we can keep on pressing the newline button on the keyboard and keep adding our prompts until we close it again with """.

Querying Local Files with Ollama

We can also query local files with Ollama. We can ask the language models questions about any readable file. However, the functionality is not available through the Ollama prompt (>>>). Rather we need to use the command line substitute for it. Here is an example using the Gemma2B instruct model.

$ ollama run gemma:2b-instruct What is this file about "$(cat NOTES.md)"

The above command has the following parts:

- The command to run the Ollama model:

ollama run gemma:2b-instruct - Next, the prompt:

What is this file about - Finally, the path to the file with the concatenation command:

"$(cat NOTES.md)"

This will simply throw the content of the file to the model which the model engages with as a context. We get the following output for the above command.

**File:** NOTES.md **Summary:** The file provides instructions and examples for using the Ollama language model. It includes information on installing and using different models, including text, instruct, and multi-line prompts. **Key Points:** - Installation instructions for Ollama. - Different model options: text, instruct, and multi-line prompts. - Accessing model information and examples. - Using VLMs (Vector Language Models). - Exiting and deallocating GPU memory. - Removing models from the collection. **Purpose:** - To provide users with comprehensive information on using the Ollama language model. - To facilitate exploration of different models and prompts. - To allow users to customize their experience based on their preferences.

The output is entirely correct. The Markdown file contains the instructions to use Ollama. You can try it on your own local files as well and check how the model behaves.

Using VLMs (Vision Language Models) with Ollama

Ollama also supports the most popular multimodal VLMs. One of them is Llava. Let’s see how to use that.

First, we need to download and run the Llava 7B 4-bit quantized version.

$ ollama run llava:7b

This is the image we will query the Llava model about. We can do that directory from the Ollama prompt.

This is the image that we are going to query Ollama about.

And here is how we can prompt Llava using Ollama.

>>> What does this image show ./image_1.jpg

The prompt should contain what we want from the Llava model and that path to the image. You can also provide an absolute path to the image.

>>> What does this image show /home/sovitdl/my_data/images/image_1.jpg

This is what we get as output from Llava.

Added image './image_1.jpg' The image shows a dog standing on the beach near the water's edge. The sky is partly cloudy, and it appears to be either sunrise or sunset given the soft light in the background.

The model is able to get the overall idea of the image, however, not entirely accurate.

Removing Models from Ollama

There will be times when we will want to delete a specific model from Ollama. The steps are quite simple. It needs to be a terminal command and the following shows removing the Gemma 2B text model.

$ ollama rm gemma:2b-text

We use the ollama rm command and provide the exact name of the model. We can get the names of all the downloaded models using ollama list.

Freeing Up RAM And/Or GPU Memory

One issue with Ollama is that even if we type /bye after using a model, the memory (either GPU or RAM) does not get entirely released. It will be released to some extent after around 5 minutes of quitting the chat. However, most of the time, a system restart is necessary. Alternatively, there are ways to free up any resources from Ollama. We just need to stop the Ollama services entirely.

We can do so using the following command.

$ sudo systemctl stop ollama

This will ask for the system password that we need to provide and will terminate all the Ollama services resulting in freeing up any occupied memory.

After this, none of the Ollama commands will work. We can again execute the following to start the Ollama services.

$ sudo systemctl start ollama

This allows us to again use Ollama normally.

Summary and Conclusion

In this article, we had a brief overview of some of the most common functionalities and commands of Ollama. We started with downloading Ollama, using chat models, and VLMs, deleting models, and even freeing up resources properly. In future posts, we will see how to add our own model to Ollama. I hope that this article was worth your time.

If you have any doubts, thoughts, or suggestions, please leave them in the comment section. I will surely address them.

You can contact me using the Contact section. You can also find me on LinkedIn, and X.